Jan 2017 IIM&T Project Newsletter


Executive Summary

This newsletter is intended to keep the HL7 Integration of Information Models and Tools (IIM&T) Project’s co-sponsors, stakeholders and proponents informed and engaged regarding status, issues and plans, both in general and in support of the January 2017 HL7 meeting. Formulated by SMEs and endorsed by a solid base of stakeholders, this newsletter supports communication, which is one of the project’s three tenets. The other two tenets are the integration work itself and the governance that supplements it. Both will build on near-term efforts to advance the project, and their accomplishments and challenges will be showcased here. An injection of resources is certainly needed, along with more stakeholders who will push for this work. In the meantime, we will make the most of here-and-now efforts and meetings to demonstrate the merits of integrating information models, enabled by tooling, in order to bolster implementation assets such as, but not limited to, FHIR. We gratefully acknowledge the contributions offered by the FHA, DoD/VA IPO, ONC, VA and DoD, Intermountain Healthcare Systems and The Open Group. We know this area is complex and not well understood, and we offer this insight to assist. We believe too many efforts are trying to build the ultimate skyscraper starting on the third floor, without a sufficient foundation. To improve our current delivery systems and position us for the ultimate Learning Health System, where the data demands and the stakeholders are greater, this effort offers what we regard as the missing link: the foundation for semantic interoperability. Your feedback and suggestions in this quest to communicate the evolution of this work are welcome.

In addition to our featured article on integration accomplishments, other insights include:

- The Data Access Framework (DAF) Core has been renamed US Core.

- The CIMI workgroup conducted an HL7 comments-only ballot presenting its Core Reference Model, Reference Archetypes and Patterns approach. Ballot content was due to HL7 by December 3 so that results would be available for the HL7 January 12-20, 2017 Workgroup Meeting in San Antonio, Texas.

- As part of this ballot, the Patient Care Workgroup Skin / Wound Assessment pilot project’s DCMs were converted into FHIR profiles and extensions.

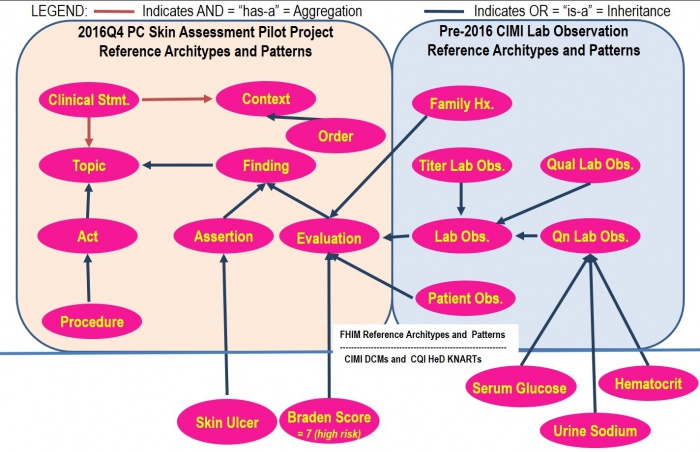

- Excluded from this ballot are FHIM domain models, CIMI Detailed Clinical Models (DCMs), and CQI/CDS Knowledge Artifacts (KNARTs). The FHIM, CQI/CDS and CIMI teams are refactoring the FHIM Domain Class Models and the CIMI Basic Meta Model (BMM) with the new Clinical Statement Reference Archetypes and Patterns, derived from the Skin / Wound Assessment Pilot Project, as shown in Figure 2 and Figure 3.

- The FINDING’s ASSERTION and EVALUATION_RESULT patterns are included in the January ballot.

- PROCEDURE and CONTEXT decompositions are planned for the May Ballot.

- The CQI and CDS workgroups’ 2017 plans include completing CQL 1.2, the FHIR Clinical Reasoning Module (formerly the CQF-on-FHIR IG) and the QI Core FHIR Profiles. QI Core is being updated to derive from FHIR US Core (formerly DAF Core), and several production projects are in progress or planned for 2017.

- Concurrently, the IIM&T project is developing both 1) a Practitioner Users Guide for clinicians, analysts and implementers and 2) a Style Guide describing the high-level patterns, models and terminology bindings for informaticists and developers.

- The SOLOR Team is refocusing and will report their status and plans later.

Featured Article: IIM&T Accomplishments

HL7 Project “Integration of Information Models and Tools (IIM&T)” is a work in progress. Since September 2016, the CIMI-sponsored HL7 IIM&T Project Scope Statement has been vetted by the co-sponsoring workgroups and Subject Matter Experts (SMEs). It is ready for review by the HL7 Steering Division (SD), FHIR Management Group (FMG) and Technical Steering Committee (TSC) during the January 2017 HL7 Workgroup Meeting in San Antonio, with approval expected shortly after the meeting. An IPO DoD VA Joint Exploratory Team (JET) proposal was submitted to fund two years of pilot studies to verify and validate the IIM&T approach. Funding results should be announced in February.

The CIMI Working Group aims to unify and, in some cases, integrate several existing models, including the FHIM, CIMI DCMs, CQI QUICK and QI Core, US Core FHIR Profiles, vMR, and QDM data models, within an HL7 Service Aware Interoperability Framework (SAIF). This logical model shall also formally align with the SNOMED CT Concept Model and the other standard terminologies that comprise SOLOR.

In preparation for the January 2017 HL7 Comment Ballot, Galen Mulrooney, Claude Nanjo, Richard Esmond, Susan Matney and Jay Lyle focused the group’s initial efforts on:

- Identifying the technologies used to represent this model and defining proper guidelines for their use. In particular,

- The CIMI Working Group identified the UML Archetype Modeling Language (AML) profile as the preferred modeling framework for the CIMI Reference Model and top-level archetypes. OpenEHR ADL technologies are then used to define downstream archetypes, including detailed clinical models (DCMs). Note that AML models can be converted into their Basic Meta Model (BMM) and Archetype Definition Language (ADL) representations, both of which are OpenEHR specifications.

- The CIMI Working Group has agreed to model CIMI patterns using the OpenEHR BMM Specification and to express all constraints on these patterns using the OpenEHR ADL Specification. The team has agreed not to allow the definition of new classes and attributes at the archetype (e.g., DCM) level.

- Achieving consensus on a common foundational model aligned with ISO13606 and OpenEHR serving as a starting point for both CIMI clinical models and CQF knowledge artifacts.

- Defining the proper alignment of the models with the SNOMED CT Concept Model so that terminology alignment is not an afterthought but is done as part of the foundational development of the model.

- Defining the foundational FHIM and CIMI Clinical Statement Pattern to enhance the models’ alignment with the SNOMED CT SITUATION with Explicit CONTEXT concept model, and to allow a consistent approach to expressing ‘negation’. The DCMs must support expressing the presence and absence of clinical FINDINGs, the performance and non-performance of clinical ACTIONs, and proposals, plans, and orders.

- Surfacing existing CIMI archetypes as formal UML models for community review, then building on that work in a joint development effort that uses the existing models as a starting point. The CIMI team introduced three new core models: the clinical ASSERTION, EVALUATION_RESULT (AKA OBSERVATION or RESULT), and PROCEDURE Patterns.

- Exploring the generation of consistent and traceable FHIR profiles from CIMI artifacts, first manually and then using SIGG (MDHT, MDMI) and FHIR tools.
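The clinical statement pattern’s approach to negation can be illustrated by keeping a statement’s topic fixed and recording presence/absence, performance/non-performance, or intent in a separate context. The following Python sketch uses hypothetical class names and context labels. It is not the CIMI BMM, and the labels are not SNOMED CT codes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class FindingContext(Enum):
    # Hypothetical labels, loosely modeled on SNOMED CT "finding context" values
    PRESENT = "present"
    ABSENT = "absent"


class ActionContext(Enum):
    # Hypothetical labels covering performance, non-performance and intent
    PERFORMED = "performed"
    NOT_PERFORMED = "not performed"
    PROPOSED = "proposed"
    PLANNED = "planned"
    ORDERED = "ordered"


@dataclass(frozen=True)
class FindingStatement:
    topic: str               # what was looked for, e.g. "skin ulcer"
    context: FindingContext  # whether it was found present or absent


@dataclass(frozen=True)
class ActionStatement:
    topic: str               # the action, e.g. "wound dressing"
    context: ActionContext   # done, not done, or only intended


Statement = Union[FindingStatement, ActionStatement]


def is_negated(statement: Statement) -> bool:
    """Negation is read from the context; the topic is never a 'no X' concept."""
    return statement.context in (FindingContext.ABSENT, ActionContext.NOT_PERFORMED)


def render(statement: Statement) -> str:
    return f"{statement.topic}: {statement.context.value}"


no_ulcer = FindingStatement("skin ulcer", FindingContext.ABSENT)
planned_dressing = ActionStatement("wound dressing", ActionContext.PLANNED)
print(render(no_ulcer), is_negated(no_ulcer))                  # skin ulcer: absent True
print(render(planned_dressing), is_negated(planned_dressing))  # wound dressing: planned False
```

One benefit of this design is that a query for a topic such as skin ulcer can find both positive and negative statements. Negation is read from the context rather than from a pre-coordinated "no skin ulcer" concept.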

Historically, CIMI has focused on laboratory result OBSERVATIONs. The CIMI Working Group is currently fleshing out the CIMI Reference Archetypes to include many of the core classes that make up the FHIM, vMR, OpenEHR, and QDM models, such as PROCEDUREs, medication-related classes, and ENCOUNTERs. In this ballot cycle, the CIMI Working Group also explored the structural relationship between physical EVALUATION_RESULTs and clinical ASSERTIONs, using the Wound Assessment pilot study as a concrete use case. The pilot focused on the clinical-statement ASSERTION and EVALUATION_RESULT patterns, as shown in Figure 3. For the May 2017 ballot cycle, we plan to expand this use case to include PROCEDURE and CONTEXT.

HL7 Patient Care Workgroup’s Wound Care Pilot Project Status

Additional Details: http://wiki.hl7.org/index.php?title=PC_CIMI_Proof_of_Concept

Figure: Clinical Perspective of CIMI Reference Archetypes, Patterns, DCMs and HeD KNARTs Based on SNOMED Observable Model

Historically, CIMI focused on lab-result OBSERVATIONs. The Patient Care WG’s CIMI validation Wound/Skin Assessment pilot study added clinical-statement FINDINGs, ASSERTIONs and EVALUATIONs (AKA OBSERVATIONs or RESULTs), as shown in Figure 3. The 2016Q4 EVALUATION_RESULT corresponds to the pre-2016 OBSERVATION in “Lab OBSERVATION”. In brief, models that are based on the CIMI, FHIM, QI Core, and US Core specifications and that use SOLOR terminologies require ambiguities to be resolved and harmonized. Galen, Claude, Richard, Susan and Jay are working on the harmonization represented in Figure 2. The HL7 Patient Care Workgroup Wound Assessment Pilot Project is providing the reference use case and information model for this pilot study. Claude and Galen have defined candidate clinical FINDING, ASSERTION and EVALUATION_RESULT Reference Archetypes and Patterns, as shown in Figure 3, and the teams are working through concept inconsistencies. One such inconsistency is the “SIGNATURE” concept. In FHIM it is simply an ATTRIBUTION (the set of persons signing or cosigning a clinical statement), while in CIMI a “SIGNATURE” is an ACT (EVENT) with full SNOMED CONTEXT (who, what, when, where, how, etc.). FHIM is migrating to the CIMI approach. Susan argues that Family Hx. and Patient Obx. are ASSERTIONs. Stan, however, points out that ASSERTION and EVALUATION_RESULT are structures that do not imply semantics, and that any ASSERTION can be represented as an EVALUATION_RESULT (e.g., a LOINC question and SNOMED reply pair).
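The SIGNATURE difference can be sketched as two data shapes and a one-way migration. In this hypothetical Python sketch, the class names and the `migrate` helper are illustrations, not FHIM or CIMI artifacts. The migration deliberately leaves the when/where/how context empty, because a bare attribution does not carry it.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class FhimAttribution:
    # FHIM style as described above: just the set of persons signing or cosigning
    signers: FrozenSet[str]


@dataclass(frozen=True)
class SignatureAct:
    # CIMI style: signing is itself an ACT (EVENT) carrying its own context
    who: str
    when: Optional[str] = None   # e.g. an ISO 8601 timestamp
    where: Optional[str] = None
    how: Optional[str] = None    # e.g. "electronic"


def migrate(attribution: FhimAttribution) -> List[SignatureAct]:
    """One act per signer; context the attribution never held is left empty, not guessed."""
    return [SignatureAct(who=person) for person in sorted(attribution.signers)]


acts = migrate(FhimAttribution(frozenset({"cosigner", "author"})))
print([a.who for a in acts])  # ['author', 'cosigner']
```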

Lively January HL7 workgroup meeting discussions on Reference Archetypes, Patterns, DCMs and KNARTs, and on FHIR implementation models and tools, resulted in the figure’s FINDING (AKA OBSERVABLE) having both ASSERTION and EVALUATION_RESULT patterns. The discussions are summarized in the Attachment. Note that both patterns are semantically equivalent structural patterns rather than arbitrary classifications (e.g., objective vs. subjective). The EVALUATION_RESULT pattern is generally a “LOINC-question and SNOMED-answer pair” (AKA FINDING, OBSERVATION). The ASSERTION pattern (AKA CONDITION, PROBLEM, DIAGNOSIS) implies the definition of a SNOMED concept (e.g., the patient has diabetes or skin ulcers, where diabetes or skin ulcer is defined by one or more clinical evaluations).
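To make the structural distinction concrete, here is a minimal Python sketch. The class names, field names and generic question text are hypothetical placeholders, not CIMI BMM classes, LOINC codes or SNOMED CT codes. The sketch shows an EVALUATION_RESULT as a question/answer pair and an ASSERTION as a single asserted concept. It also illustrates Stan’s point that an ASSERTION can be recast as an EVALUATION_RESULT.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assertion:
    # ASSERTION pattern: one concept stated about the patient
    concept: str  # e.g. "diabetes mellitus" (placeholder text, not a SNOMED CT code)


@dataclass(frozen=True)
class EvaluationResult:
    # EVALUATION_RESULT pattern: a question/answer pair
    question: str  # LOINC-style "question" (placeholder text)
    answer: str    # SNOMED-style coded answer or a quantity rendered as text


# Hypothetical generic question used when recasting an ASSERTION
GENERIC_QUESTION = "problem or diagnosis"


def as_evaluation_result(assertion: Assertion) -> EvaluationResult:
    """Recast an ASSERTION as a question/answer pair without changing what it says."""
    return EvaluationResult(question=GENERIC_QUESTION, answer=assertion.concept)


systolic = EvaluationResult(question="systolic blood pressure", answer="120 mmHg")
diabetes = Assertion(concept="diabetes mellitus")
print(as_evaluation_result(diabetes))
# EvaluationResult(question='problem or diagnosis', answer='diabetes mellitus')
```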

Clinical Observation Modelling by Walter Sujansky Jan 31, 2017

Informatics Perspective

The CIMI technical architecture consists of the three Basic Meta Model (BMM) reference model layers and two archetype layers shown here.

The CIMI Reference Model is expressed using the OpenEHR Basic Metamodel (BMM) Language. The archetype layers are expressed using the OpenEHR Archetype Definition Language (ADL). Reference model modules define classes, attributes, and class hierarchies. The archetype layers, by contrast, only specify progressive constraints on the reference model and do not introduce new classes, attributes, or class-class relationships.

- The CIMI Core Reference Model provides the core granularity of the CIMI model and introduces its top-level classes such as the DATA_VALUE class and the LOCATABLE class. This reference layer module defines the CIMI primitive types and core data types.

- The CIMI Foundational Reference Model is closely aligned to ISO13606 and the OpenEHR Core Reference Model. It defines foundational CIMI clinical documents and clinical record patterns. It also introduces the PARTY, ROLE, and PARTY_RELATIONSHIP patterns and defines the top-level CLUSTER class for complex CIMI type hierarchies. CQI Knowledge Artifacts may also leverage this layer.

- The CIMI Clinical Reference Model consists of the classes derived from existing CIMI archetypes, the FHIM, QUICK, vMR, and QDM. This layer defines the set of 'schematic anchors' (to borrow Richard Esmond's term), or core reference model patterns, from which all CIMI archetype hierarchies and ultimately Detailed Clinical Models (DCMs) derive. Requirements for this layer come from FHIM, vMR, QDM, QUICK, FHIR US Core, SDC, etc.

- The goal is to define the reference models with low FHIR transformation costs where feasible. Some divergence is inherent, however, given the different requirements underlying the two models.

- Galen points out that FHIM’s expressivity will not carry over to CIMI DCMs, given the models' different requirements (e.g., FHIM includes finance and accounting).

- The CIMI Foundational Archetypes define the top-level constraints on the CIMI Reference Model. These typically consist of attribute formal documentation and high level attribute semantic and value set bindings. Archetypes at this layer will provide the foundational requirements for future US Core and QI Core profiles. Future pilots will explore the generation of US Core and QI Core archetypes from these CIMI archetypes.

- The CIMI Detailed Clinical Model Layer represents the set of leaf-level constraining profiles on the foundational archetypes to create families of archetypes that only vary in their finest terminology bindings and cardinality constraints. This layer is intended to support clinical interoperability through an unambiguous specification of model constraints for information exchange, information retrieval, and data processing.
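The rule that archetype layers only constrain the reference model, never adding classes or attributes, can be checked mechanically. The following Python sketch is hypothetical. The attribute names, the cardinality representation and the `check`/`flatten` helpers are illustrations, not OpenEHR ADL or BMM tooling. The sketch flags any attribute a child layer introduces and any cardinality it widens.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Cardinality:
    lower: int
    upper: Optional[int]  # None stands for an unbounded upper limit ("*")

    def within(self, outer: "Cardinality") -> bool:
        """True if this range is the same as, or narrower than, `outer`."""
        if self.lower < outer.lower:
            return False
        if outer.upper is None:
            return True
        return self.upper is not None and self.upper <= outer.upper


Constraints = Dict[str, Cardinality]

# Hypothetical reference-model class: attribute name -> permitted cardinality
REFERENCE_CLASS: Constraints = {
    "code": Cardinality(1, 1),
    "value": Cardinality(0, 1),
    "body_site": Cardinality(0, None),
    "method": Cardinality(0, 1),
}


def check(parent: Constraints, child: Constraints) -> List[str]:
    """List violations; an empty list means `child` only constrains `parent`."""
    problems = []
    for name, card in child.items():
        if name not in parent:
            problems.append(f"new attribute not allowed: {name}")
        elif not card.within(parent[name]):
            problems.append(f"{name} widens its parent cardinality")
    return problems


def flatten(parent: Constraints, child: Constraints) -> Constraints:
    """Effective constraints after applying a child layer on top of its parent."""
    return {**parent, **child}


foundational = {"body_site": Cardinality(0, 1)}  # hypothetical foundational archetype
dcm = {"value": Cardinality(1, 1)}               # hypothetical DCM layered on top
bad_dcm = {"color": Cardinality(0, 1)}           # tries to add a new attribute

print(check(REFERENCE_CLASS, foundational))                    # []
print(check(flatten(REFERENCE_CLASS, foundational), dcm))      # []
print(check(flatten(REFERENCE_CLASS, foundational), bad_dcm))  # ['new attribute not allowed: color']
```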

From layers 1-5, we define the set of transformations (e.g., SIGG (MDHT, MDMI)) to generate the corresponding FHIR profiles including the US Core and QI Core profile sets. Note that FHIR profiles can be generated from the various levels of the archetype hierarchy depending on requirements. The lower down in the hierarchy, the more prescriptive the profile is in terms of constraints. Much like ADL Archetypes, FHIR profiles can be layered.

It is important to note that some FHIR profiles may be derived from the Foundational Archetype Layer (e.g., US Core, some QI Core profiles, some CQIF profiles on PlanDefinition, Questionnaire and ActivityDefinition, etc.) and others from the DCM Layer (e.g., bilirubin, HbA1c, etc.). In other words, the arrow for FHIR Profiles stems out of the outer box rather than the last of the inner boxes (the DCM box).
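Layered profiles can be pictured as repeatedly narrowing terminology bindings. A profile derived from the Foundational Archetype Layer keeps broader bindings, and one derived from a DCM keeps narrower ones. The Python sketch below assumes that bindings are plain sets of codes. The element names and code strings (such as `bilirubin-total`) are placeholders rather than LOINC, SNOMED CT or UCUM content, and `profile_at` is a hypothetical helper, not a FHIR or SIGG function.

```python
from functools import reduce
from typing import Dict, FrozenSet, List

# Element name -> set of allowed codes (placeholder strings, not real terminology content)
Bindings = Dict[str, FrozenSet[str]]


def merge(acc: Bindings, layer: Bindings) -> Bindings:
    """Apply one layer: an existing binding can only be intersected (narrowed)."""
    merged = dict(acc)
    for element, codes in layer.items():
        merged[element] = merged[element] & codes if element in merged else codes
    return merged


def profile_at(layers: List[Bindings], depth: int) -> Bindings:
    """Effective bindings of a profile generated from the first `depth` layers."""
    return reduce(merge, layers[:depth], {})


foundational_lab_result: Bindings = {
    "code": frozenset({"bilirubin-total", "bilirubin-direct", "hba1c"}),
    "unit": frozenset({"mg/dL", "umol/L", "%"}),
}
bilirubin_dcm: Bindings = {
    "code": frozenset({"bilirubin-total"}),
    "unit": frozenset({"mg/dL", "umol/L"}),
}
layers = [foundational_lab_result, bilirubin_dcm]

broad = profile_at(layers, 1)   # e.g. a profile generated from the foundational layer
narrow = profile_at(layers, 2)  # e.g. a bilirubin profile generated from the DCM layer
print(sorted(narrow["code"]))   # ['bilirubin-total']
```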

Process Perspective

CIMI Software Development Process “on FHIR”

The figure shows potential use-case processes and products:

- Path 1-7-8 represents the ideal case, in which reusable FHIR-based components are already available.

- Path 1-2-3-4-7-8 applies when new requirements are met locally but HL7 Items A-C are not updated.

- Path 1-2-3-4-5-6-7-8 applies when new requirements are met locally and HL7 Items A-C are updated.

- Step 9 is periodically done to configuration manage, version control, publish and standardize HL7 Items A-C.

Our objectives are:

- For logical model and FHIR artifacts to be tool-based and freely available.

- To produce quality documentation and training videos to enable:

- 1, 2, 3, 4 done by Clinical Business-Analysts

- 2, 3 and 4 governance done by the organizations doing the work

- 5, 6 and 9 governance done by the appropriate HL7 workgroups

- 7 and 8 done by Software Developers
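The three paths and the role assignments above can be summarized as a small lookup. This Python sketch is illustrative only. `choose_path`, `responsibilities` and the two dictionaries are hypothetical names, and the mapping simply restates the figure’s numbered steps as described in the text.

```python
from typing import Dict, List

# Who performs or governs each numbered process step, per the objectives listed above
PERFORMED_BY: Dict[int, str] = {
    1: "Clinical Business-Analysts", 2: "Clinical Business-Analysts",
    3: "Clinical Business-Analysts", 4: "Clinical Business-Analysts",
    7: "Software Developers", 8: "Software Developers",
}
GOVERNED_BY: Dict[int, str] = {
    2: "organization doing the work", 3: "organization doing the work",
    4: "organization doing the work",
    5: "HL7 workgroups", 6: "HL7 workgroups", 9: "HL7 workgroups",
}


def choose_path(reusable_components_exist: bool, update_hl7_items: bool) -> List[int]:
    """Pick one of the three paths (step 9 runs periodically, outside any single path)."""
    if reusable_components_exist:
        return [1, 7, 8]
    if update_hl7_items:
        return [1, 2, 3, 4, 5, 6, 7, 8]
    return [1, 2, 3, 4, 7, 8]


def responsibilities(path: List[int]) -> List[str]:
    """Describe who performs and/or governs each step on the path."""
    lines = []
    for step in path:
        roles = [r for r in (PERFORMED_BY.get(step), GOVERNED_BY.get(step)) if r]
        lines.append(f"step {step}: " + "; ".join(roles))
    return lines


for line in responsibilities(choose_path(reusable_components_exist=False, update_hl7_items=True)):
    print(line)
```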

At the January HL7 Workgroup meeting, CIC requested further clarification and detail for Figure 1 to indicate where and how clinicians can efficiently participate in the process.

As an example, when Michael van der Zel does Business Analysis / FHIR Development, he generally follows these steps:

- Understand and document the business process (aka Use-Case) (Figure 1, process 1)

- Detail the process into steps

- EHRS-FM might help determine functional requirements and their conformance criteria

- Determine the needed information for each of the process steps (inputs and outputs)

- EHRS-FM can help because it has a mapping to FHIM and FHIM has a mapping to FHIR

- FHIM might also be used directly

- Find existing models and terminology (DCM, FHIR, etc.) (Figure, Items A and C)


- Create / adjust / profile DCM or terminology, if needed (Figure, processes 2 & 3)

- Find mapped FHIR resources or map / create / profile FHIR Resources from DCM logical models identified in previous steps. (Figure, process 4)

- Use existing FHIR implementation to realize the system (deploy the profiles) (Figure 1, process 7 and Item C)

- Do Connectathons (Figure, process 8)
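Michael’s observation that EHRS-FM maps to FHIM, which in turn maps to FHIR, amounts to following two mapping tables in sequence. This Python sketch uses hypothetical table contents; only `Immunization` is a real FHIR resource name. A chain that stops early marks where a model or profile would have to be created or adjusted (processes 2 to 4).

```python
from typing import Dict, List

# Hypothetical mapping tables; keys and values are placeholders, not real EHRS-FM or FHIM identifiers
EHRS_FM_TO_FHIM: Dict[str, str] = {
    "record immunization administration": "FHIM Immunization class",
}
FHIM_TO_FHIR: Dict[str, str] = {
    "FHIM Immunization class": "Immunization",  # FHIR resource name
}


def trace(requirement: str) -> List[str]:
    """Follow a functional requirement through FHIM to FHIR, stopping where a mapping is missing."""
    chain = [requirement]
    for table in (EHRS_FM_TO_FHIM, FHIM_TO_FHIR):
        target = table.get(chain[-1])
        if target is None:
            break
        chain.append(target)
    return chain


print(trace("record immunization administration"))
print(trace("manage advance directives"))  # unmapped, so the chain stops at the requirement
```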

Michael believes the process analysis step is very important and should be made explicit, since CIMI is about logical models that are transformed (using predefined mappings) into implementation models (FHIR profiled Resources). For the transformation of CIMI-compliant logical models, we will explore the use of SIGG and FHIR tooling. We therefore intend to express these logical models as FHIR Logical Models (AKA FHIR Logical Structure Definitions) using SIGG tooling, and then use FHIR mapping to generate FHIR resource profiles, analogous to what clinFHIR (David Hay) is doing. For testing software, Michael considers Connectathons very important.

Following the figure, a software (SW) project will follow some combination of these use-case steps:

- Do business and FHIR analysis to determine system requirements-specifications and conformance criteria (Figure 1, process 1). EHRS-FM might be used here because it is traceable to FHIM, which is in turn traceable to FHIR.

- Note that the HL7 Service Aware Interoperability Framework (SAIF) Enterprise Compliance and Conformance Framework (ECCF) can be used to maintain a project’s requirements-specifications, design and test artifacts.

- Maintain the FHIM, CIMI, CQF, etc. models (Figure 1, process 2) to meet the requirements; these models may be updated and bound to SOLOR. This work can be done with a UML tool or the OpenEHR ADL workbench.

- Maintain SOLOR (SNOMED with extensions for LOINC and RxNorm, shown as Figure 1, process 3). If appropriate SOLOR concepts do not exist, they can be added using the IHTSDO workbench with the ISAAC plugin. Processes 2 and 3 are closely related and may iterate back and forth or be done simultaneously.

- Use SIGG (MDHT, MDMI) to generate the needed implementation artifacts (e.g., FHIR structure definitions for profiles or extensions; CDA or NIEM IEPD specifications can also be generated).

- Governance involves change control, configuration management and version control. CIMI governance is generally federated: local development organizations govern their own artifacts and may wish to provide versions to HL7, while the appropriate HL7 workgroups govern HL7 artifacts and ballots.

- Similarly, FHIR governance is generally federated: local development organizations govern their own artifacts and may wish to provide versions to HL7. At HL7, FHIR-compliant reusable artifacts are governed by the FHIR workgroup.

- Software components are generally developed by commercial, government and academic organizations and their contractors. HL7 provides artifacts, documentation and training to empower these efforts.

- Software components are generally tested and used by commercial, government and academic organizations and their contractors.

- Periodically, CIMI and FHIM artifacts are balloted as HL7 and/or ISO standards. These include the set of clinical domain information models (e.g., FHIM covers 30+ domains) and CIMI DCMs with SOLOR-derived terminology value sets. Having standard requirements-specification conformance criteria traceable to FHIR implementation artifacts can maximize the efficiency and effectiveness of multi-enterprise clinical interoperability. These tool-based, standardized clinical interoperability artifacts can be augmented with business, service and resource requirements-specification conformance-criteria models and implementation artifacts. HL7 and its workgroups have identified best-practice principles and tools supporting its clinical artifacts, which can provide standardized approaches for government, industry and academic organizations to adopt, train on and use. These standard use cases, conformance criteria models and implementation artifacts can be used and maintained within the HL7 Service Aware Interoperability Framework (SAIF) and its Enterprise Compliance and Conformance Framework (ECCF) to support specific business use cases, process models, acquisitions and/or developments.

FHIR Clinical Reasoning

In the September 2016 ballot cycle, CQF balloted the FHIR-Based Clinical Quality Framework (CQF-on-FHIR) IG as an STU (Standard for Trial Use). This guidance was used to support the CQF-on-FHIR and Payer Extract tracks in the September 2016 FHIR Connectathon. The guidance in the FHIR STU was prepared as a Universal Realm Specification with support from the Clinical Quality Framework (CQF) initiative, which is a public-private partnership sponsored by the Centers for Medicare & Medicaid Services (CMS) and the U.S. Office of the National Coordinator for Health Information Technology (ONC) to identify, develop, harmonize, and validate standards for clinical decision support and electronic clinical quality measurement.

Part of the reconciliation for the CQF-on-FHIR IG September ballot involved incorporating the contents of the IG as a new module in FHIR, the FHIR Clinical Reasoning module.

The Clinical Reasoning module provides resources and operations to enable the representation, distribution, and evaluation of clinical knowledge artifacts such as clinical decision support rules, quality measures, order sets, and protocols. In addition, the module describes how expression languages can be used throughout the specification to provide dynamic capabilities.

Clinical Reasoning involves the ability to represent and encode clinical knowledge in a very broad sense so that it can be integrated into clinical systems. This encoding may be as simple as controlling whether a section of an order set appears in a CPOE system based on the specific conditions present for the patient, or it may be as complex as representing the care pathway for patients with multiple conditions.

The Clinical Reasoning module focuses on enabling two primary use cases:

- Sharing - The ability to represent clinical knowledge artifacts such as decision support rules, order sets, protocols, and quality measures, and to do so in a way that enables those artifacts to be shared across organizations and institutions.

- Evaluation - The ability to evaluate clinical knowledge artifacts in the context of a specific patient or population, including the ability to request decision support guidance, impact clinical workflow, and retrospectively assess quality metrics.

To enable these use cases, the module defines several components that can each be used independently, or combined to enable more complex functionality. These components are:

- Expression Logic represents complex logic using languages such as FHIRPath and Clinical Quality Language (CQL).

- Definitional Resources are template resources that are not defined for any specific patient but are used to define the actions to be performed as part of a clinical knowledge artifact such as an order set or decision support rule.

- Knowledge Artifacts represent clinical knowledge artifacts such as decision support rules and clinical quality measures.
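The split between definitional resources and patient-specific results can be sketched as templates that carry a condition and are applied to one patient at a time. In this minimal Python sketch, the class names and the `apply_templates` helper are hypothetical, and plain Python lambdas stand in for CQL or FHIRPath expressions. It is loosely analogous to the FHIR `$apply` operation on PlanDefinition, but it is not an implementation of that operation.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Patient = Dict[str, Any]


@dataclass(frozen=True)
class ActionTemplate:
    """Definitional content: describes an action without referring to any patient."""
    title: str
    applies_when: Callable[[Patient], bool]  # stands in for a CQL or FHIRPath expression


@dataclass(frozen=True)
class ProposedAction:
    """Patient-specific result of applying a template."""
    patient_id: str
    title: str


def apply_templates(templates: List[ActionTemplate], patient: Patient) -> List[ProposedAction]:
    """Evaluate each template's condition against one patient and keep the matches."""
    return [ProposedAction(str(patient["id"]), t.title)
            for t in templates if t.applies_when(patient)]


# Hypothetical order-set fragment; condition names are plain-text placeholders
order_set = [
    ActionTemplate("HbA1c test", lambda p: "diabetes mellitus" in p["conditions"]),
    ActionTemplate("Wound assessment", lambda p: "skin ulcer" in p["conditions"]),
]
patient: Patient = {"id": "example-1", "conditions": ["diabetes mellitus"]}
print(apply_templates(order_set, patient))
```

Keeping the templates free of patient data is what allows the same order set to be shared across organizations and then evaluated locally, which mirrors the module’s Sharing and Evaluation use cases.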

For 2017, the Clinical Quality Framework initiative will continue to develop the FHIR Clinical Reasoning module and related standards. Specifically, the reconciled changes to the CQF-IG will be applied to the FHIR Clinical Reasoning module and published as part of FHIR STU3 in March 2017. The QI Core profiles will be updated to derive directly from US Core, and the QUICK tooling will be updated to provide conceptual documentation, as well as logical models suitable for use in authoring and evaluating CDS and CQI knowledge artifacts. The CQF initiative is actively working with multiple groups to continue to refine and implement the resources and guidance provided by the FHIR Clinical Reasoning module, including the National Comprehensive Cancer Network, the Centers for Disease Control and Prevention, and the National Committee for Quality Assurance.

SIGG (MDHT, MDMI) Tools Approach Status and Plans

While the overall development plan for SIGG (MDHT, MDMI) has not changed, its timeline and/or path must shift in 2017. FHA will need to cease developmental funding of SIGG this month (January) due to other emergent priorities. The SIGG team is therefore wrapping up final development activity and the documentation component in preparation for disengagement. If the JIF proposal is accepted, or if the IPO can provide for further development under the FPG JET moving forward, the SIGG team is prepared to continue development unabated.

Currently the SIGG and the components of the SIGG are being used, and extended, in the following projects:

- FHIR Proving Ground IPO JET – MDMI Models are being developed for the DoD DES native format and the VA eHMP native format to provide data in conjunction with existing MDMI Models for FHIR Profiles. The use case is a complete round trip: it starts with a FHIR query from a FHIR Server, accesses a native server with its native access language, and returns the result to the FHIR service as a FHIR payload. This project has extended the use of SIGG beyond payload Semantic Interoperability to include a prototype for query Semantic Interoperability. An alternative approach to the SIGG was also evaluated in this process, and the SIGG was selected as the superior solution.

- SAMHSA / AHIMA Case Definition Templates – The SIGG tooling, also branded as the SAMHSA Semantic Interoperability Workbench, is being extended to let Subject Matter Experts define and build special purpose templates for clinical pathways. The resulting templates are called Case Definition Templates. In the selection of the appropriate tooling for the project, 11 other products were evaluated.

- HL7 Structured Documents CDA on FHIR Working Group – The working group is using the SIGG to replace manually developed spreadsheets with spreadsheets generated automatically from the CCDA and FHIR MDMI Models. This will support the working group’s iterative development process and provide more detailed and precise information for the community.

- VA VLER – VA Subject Matter Experts are investigating the use of the SIGG to replace a process that uses manually developed spreadsheets for mappings between VLER and CCDA data formats.

- HL7 FHIR RDF Working Group – Current members of the SIGG team and the HL7 FHIR RDF team are exploring how components of the SIGG and the ShEx / RDF technology can be coupled to provide even broader capabilities.
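The FHIR Proving Ground round trip described above relies on mapping each format to shared semantic elements rather than mapping formats pairwise. The sketch below is a toy Python illustration of that idea, not the SIGG or MDMI API. The native field names, semantic element names and code translation table are all hypothetical; only the target element names (`id`, `birthDate`, `gender`) are real FHIR Patient elements.

```python
from typing import Dict

# Hypothetical native field -> shared semantic element
NATIVE_TO_SEMANTIC: Dict[str, str] = {
    "pt_id": "patient.identifier",
    "pt_dob": "patient.birth_date",
    "pt_sex": "patient.sex",
}
# Hypothetical semantic element -> FHIR Patient element
SEMANTIC_TO_FHIR: Dict[str, str] = {
    "patient.identifier": "id",
    "patient.birth_date": "birthDate",
    "patient.sex": "gender",
}
# Hypothetical value translation for one element (native code -> FHIR gender code)
SEX_CODES: Dict[str, str] = {"M": "male", "F": "female", "U": "unknown"}


def native_to_fhir_patient(record: Dict[str, str]) -> Dict[str, str]:
    """Translate a native record into a FHIR-style Patient via shared semantic elements."""
    resource = {"resourceType": "Patient"}
    for field, value in record.items():
        semantic = NATIVE_TO_SEMANTIC.get(field)
        if semantic is None:
            continue  # unmapped native fields are dropped in this sketch
        if semantic == "patient.sex":
            value = SEX_CODES.get(value, "unknown")
        resource[SEMANTIC_TO_FHIR[semantic]] = value
    return resource


print(native_to_fhir_patient({"pt_id": "123", "pt_dob": "1950-01-01", "pt_sex": "F", "ward": "4B"}))
```

One motivation for a shared semantic layer is scaling. Adding another native format requires one new mapping to the semantic elements, not one mapping per existing format.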

Commentaries

- Formalize Governance. We believe the IIM&T project needs a more formal operational governance structure and process, because there is potential for a governance quagmire. As the content of this newsletter demonstrates, there are not always straightforward conclusions about how the work evolves. This integration work requires refactoring the individual models to ensure a clean separation of the semantic models and to reduce redundancies. Those efforts surface issues and bring about decisions that must be captured. Iterative pilots remain the commitment. Governance is expected not only to advance the identified work but also to raise awareness of this effort among additional parties and involve them, including between meetings.

Under consideration is what ONC has called a Technology Learning Community (TLC). This is not meant to change the use of HL7 Working Meetings or HSPC targeted meetings where the specialists converge. Instead, it would create a forum for additional players and lend focus to targeted interests through tiger teams and a steering committee that guide and govern toward the intended benefits. Per the figure below, the construct readily links to multiple dimensions of stakeholders, with means to identify and overcome issues. Your feedback on this approach is welcome.

As an example, the Office of the National Coordinator for Health Information Technology (ONC) convenes a Healthcare Directory Technology Learning Community (HcDir TLC) on the second Friday of each month at 12 noon Eastern Time. It builds upon discussions held during the jointly hosted ONC/FHA Provider Directory Workshop. Public and private stakeholders are encouraged to participate in the HcDir TLC to share their healthcare directory experiences and perspectives, including interoperability, data quality, and existing and evolving standards. In addition, the HcDir TLC will seek to explore non-technical issues such as governance and sustainability, recognizing that technical solutions alone are insufficient for successful implementation of healthcare directories.

- FHIM and the CIMI core model and Basic Meta Model (BMM) are being restructured to provide CIMI Reference Archetype and Pattern touchpoints for the transition from FHIM domain classes to CIMI DCMs. This involves the use of OMG’s Archetype Modeling Language (AML) UML profile. Sparx’s Enterprise Architect and No Magic’s MagicDraw are being used because they support AML. Galen Mulrooney and Claude Nanjo are the POCs.

- The CIMI-FHIM harmonization artifacts are being (comments only) balloted at HL7 to provide peer review for the January workgroup meeting. Claude Nanjo is the ballot POC.

- HL7’s EHR workgroup is doing an Immunization prototype for EHRS-FM, FHIM and SIGG integration to produce FHIR profiles and conformance criteria, ideally for a March 2017 HL7 ballot. Gary Dickinson is the EHR WG POC.

Notes

From: Jay Lyle
Sent: Tuesday, November 15, 2016 8:40 AM
Subject: FHIM & SOLOR

Thoughts on FHIM & SOLOR, as summarized yesterday:

- FHIM represents data elements specified in US realm interoperability requirements; e.g., C-CDA, FHIR/US CORE, NCPDP, ELR.

- No SOLOR requirements are in these specs or in FHIM.

- When a spec stipulates a SOLOR value, we'll fold it in.

- FHIM represents semantic model binding aligned with CIMI.

- CIMI uses SCT concept model for model binding, but no attachment to SOLOR, yet. Difficulties loom.

- When CIMI stipulates a SOLOR value, we'll fold it in.

- Folding it in = updating a value set definition and binding, no different from current process.

A bit tangentially,

- To align with CIMI, FHIM does need to adopt multi-valent bindings:

- value sets, composed of all value sets identified by required specifications, e.g., body site = {armL, armR, legL, legR} + {12345, 45678, 65421}; etc.

We are doing this now.

- value domain, using a SNOMED CT concept that semantically entails all values e.g., body structure

I’m not sure this is necessary, but it seems advisable.

- model binding for element semantics e.g., FINDING site

We need to start doing this.
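The three kinds of binding Jay lists can be carried side by side on one element. This minimal Python sketch reuses the body-site example from the email. The is-a table is a toy stand-in for SNOMED CT subsumption, and the numeric codes are the email’s placeholders, not real concept identifiers. A consistency check confirms that every member of the union value set falls under the value domain.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Toy is-a table (child -> parent); illustrative only, not SNOMED CT content
IS_A: Dict[str, str] = {
    "armL": "arm", "armR": "arm", "legL": "leg", "legR": "leg",
    "arm": "body structure", "leg": "body structure",
    "12345": "body structure", "45678": "body structure", "65421": "body structure",
}


def subsumed_by(code: str, ancestor: str) -> bool:
    """Walk up the toy hierarchy until the ancestor is found or the top is passed."""
    while code != ancestor:
        if code not in IS_A:
            return False
        code = IS_A[code]
    return True


@dataclass(frozen=True)
class MultivalentBinding:
    model_binding: str         # semantics of the element itself, e.g. "finding site"
    value_domain: str          # concept that semantically entails every permitted value
    value_set: FrozenSet[str]  # union of the value sets from the required specifications

    def consistent(self) -> bool:
        return all(subsumed_by(code, self.value_domain) for code in self.value_set)


body_site = MultivalentBinding(
    model_binding="finding site",
    value_domain="body structure",
    value_set=frozenset({"armL", "armR", "legL", "legR"}) | frozenset({"12345", "45678", "65421"}),
)
print(body_site.consistent(), len(body_site.value_set))  # True 7
```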

Rob McClure presented the HL7 Vocabulary WG “Binding Syntax” work in progress.

Reference Information Links

- 2016-06 Preliminary Report https://1drv.ms/w/s!AlkpZJej6nh_k9YPmsR8Hl6zTlQ0NQ

- 2016-08 Tech. Forum Notes https://1drv.ms/w/s!AlkpZJej6nh_k9gyRVADgOvM5SlJkQ

- 2016-09 Final Report https://1drv.ms/w/s!AlkpZJej6nh_k9dQ2qQnRuQM8gbu8A

- 2016-11 IIM&T Status Brief https://1drv.ms/p/s!AlkpZJej6nh_k90kDv1RNHeSvZ2gjw

- Briefing Slides https://1drv.ms/p/s!AlkpZJej6nh_k9dE-b_DAO8HSNNT6Q

- CIMI Practitioners’ Guide https://1drv.ms/w/s!AlkpZJej6nh_k6ZUeG7W6TaWcbTZ4Q

- CIMI Web Site http://www.opencimi.org

- CIMI Wiki http://wiki.hl7.org/index.php?title=Clinical_Information_Modeling_Initiative_Work_Group

- US CORE Wiki https://oncprojectracking.healthit.gov/wiki/display/TechLabSC/DAF+Home

- HL7 Project Scope Statement https://1drv.ms/w/s!AlkpZJej6nh_k9dYlvNWaZ3DLPKSYg

- Newsletters https://1drv.ms/f/s!AlkpZJej6nh_k-RIHMWezhAd7fONHg

- PC-CIMI Proof of Concept http://wiki.hl7.org/index.php?title=PC_CIMI_Proof_of_Concept

- Work Breakdown MPP https://1drv.ms/u/s!AlkpZJej6nh_k9dK5WOB8zkkUuaKgA

- SNOMED CT Expression Constraint Language Specification and Guide https://confluence.ihtsdotools.org/display/DOCECL/Expression+Constraint+Language+-+Specification+and+Guide

- IHTSDO http://ihtsdo.org/index.html

Attachment

The community discussed, via email, the pros and cons of separating FINDINGs into ASSERTION (e.g., patient has diabetes mellitus) and EVALUATION_RESULT (e.g., systolic blood pressure of 120 mmHg) patterns. The following discussions show the ambiguity and challenges of the concepts and terms being harmonized:

Keith Campbell states that ASSERTION and EVALUATION_RESULT are sometimes clinically indistinguishable and should be treated as one (e.g., OBSERVATION or EVALUATION_RESULT).

Gerard Freriks concurs with Stan and prefers one pattern internally (e.g., OBSERVATION or EVALUATION_RESULT). Gerard points out that how users want to see the data depicted on the screen is the implementers’ choice; e.g., a red Ferrari keeps its “color = red”.

- Gerard is unclear about what FINDING, ASSERTION and EVALUATION mean; all of them are statements about the Patient system or other processes.

- To Gerard, ASSERTIONs are OBSERVATIONs, meaning things observed by a human in the Patient system or other processes, using our senses.

- To Gerard, an EVALUATION is an Assessment/Inference about the Patient system or other processes, generated using our brains and existing implicit and explicit knowledge.

- In Gerard’s opinion, we need:

- Planning of actions and setting goals; these can relate to the Patient system or other processes, and the brain is used.

- Ordering of actions; this can relate to the Patient system or other processes, and the brain is used.

- Execution of a process: data generated during execution related to the Patient system or other processes, such as:

- Process of OBSERVATION: Lab test result

- Process of Assessment: Lab test result suggests a set of possible diseases as the cause

- Process of Planning: A goal is set; a course of action is documented: medication and new tests are ordered

- Process of Ordering: Medication is ordered

- Process of Execution: when the order is executed data is collected about times of administration or side effects noted, etc.

- Process of OBSERVATION: new test results are observed

- Process of Assessment: Lab test shows normalization

- Process of Planning: next steps, actions, discharge letter, etc.

- Gerard thinks that 5 or 6 generic Processes need to be modelled, with the assessment about the Patient system called an Inference about the Patient system. Some physical OBSERVATIONs need a lot of interpretation and assessment, but since they start with the use of our biological senses, they are still OBSERVATIONs.

- Examples are: Chest sounds, bowel sounds, heart sounds, palpations, estimations of size

- Gerard notes that we document the provision of healthcare. This places the healthcare provider at the center of any EHR.

- the healthcare provider is using his senses and brain.

- the healthcare provider decides what to document about the Patient System.

- All items (statements) are ASSERTIONs in an EHR.

- How should we model ‘FACT’? It is a slippery term, because EHR records are subjective opinions.

- Things observed depend on the healthcare provider’s senses and thoughts.

- A second dichotomy is between a new information item (Statement) and a re-used one.

- A third dichotomy is between clinical data about the Patient system and administrative or process-logic-related items (statements).

- Gerard does not like terms such as FACT and ASSERTION; rather, we need terms that describe the three dichotomies. We also need a Documentation Model that supports the clinical treatment process: OBSERVATION - Assessment - Planning - Ordering (treatment) - Execution.

- The example about the eye is analogous to the boundary problem between structure and coding system: we must choose to express the same thing in one single pattern and leave it to the software/screen builders to generate either option.

- In summary, Gerard agrees that the terms used are not well defined (or definable). The very fact that we are having this discussion shows that something is wrong. Gerard recommends:

- take the healthcare provider (HcP) as the documenting point of reference: the HcP uses his senses and brain and documents what he has perceived and thought in a Statement

- the HcP’s Statements are either about perceived phenomena or, as Assessments/Inferences, about processes (Patient System, organizational, administrative, knowledge management)

- OBSERVATION: perceived phenomena in either the Patient System, or other organizational, administrative, knowledge management processes

- ASSESSMENT/INFERENCE: thoughts about either the Patient System, or other organizational, administrative, knowledge management processes, using Observations, implicit and explicit information

- PLAN: decisions, to-do lists, procedures, guidelines, that can be ordered and impact organizational, administrative, knowledge management processes

- ORDER: decisions implying factual instructions to be executed and that impact either the Patient system (treatment), or organizational, administrative, knowledge management processes

- EXECUTION DATA: data generated during the execution of a process. These are phenomena that can be perceived.
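Gerard's five statement types and the treatment cycle he describes can be sketched as a small Python enum. This is an illustration under a simplifying assumption: it treats the cycle as strictly sequential, which real documentation need not be, and the names (`StatementType`, `next_step`, `follows_cycle`) are hypothetical. The example replays the lab-test walkthrough given earlier in Gerard's comments.

```python
from enum import Enum


class StatementType(Enum):
    # Gerard's five kinds of Statement, in the order of the treatment process.
    OBSERVATION = "perceived phenomena"
    ASSESSMENT = "thoughts/inferences using observations and knowledge"
    PLAN = "decisions, to-do lists, procedures, guidelines"
    ORDER = "decisions implying instructions to be executed"
    EXECUTION_DATA = "data generated while a process is executed"


CYCLE = list(StatementType)  # Enum iteration preserves definition order


def next_step(current: StatementType) -> StatementType:
    """Assumed strict cycle: after EXECUTION_DATA, new results are observed again."""
    i = CYCLE.index(current)
    return CYCLE[(i + 1) % len(CYCLE)]


def follows_cycle(statements) -> bool:
    """True if each (type, text) Statement is the expected successor of the previous one."""
    return all(next_step(a) is b
               for (a, _), (b, _) in zip(statements, statements[1:]))


# The lab-test walkthrough from the discussion, as (type, text) pairs.
example = [
    (StatementType.OBSERVATION, "Lab test result"),
    (StatementType.ASSESSMENT, "Result suggests a set of possible diseases"),
    (StatementType.PLAN, "Goal set; course of action documented"),
    (StatementType.ORDER, "Medication is ordered"),
    (StatementType.EXECUTION_DATA, "Administration times, side effects noted"),
    (StatementType.OBSERVATION, "New test results observed"),
    (StatementType.ASSESSMENT, "Lab test shows normalization"),
    (StatementType.PLAN, "Next steps, discharge letter"),
]

print(follows_cycle(example))  # True
```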

Walter Sujansky supports Keith, offering an engineering perspective rather than an epistemological/ontological one. Considering that our goal is to build *machines* (software) that serve as *tools* for health care providers, ontological distinctions only matter if they materially influence (1) the ease with which such machines are built or maintained, (2) the correct functioning of such machines given their requirements, and (3) the value such machines provide when used as tools by health care providers. Walter has found it useful, when discussions drill down to subtle distinctions of semantics, to step back and consider whether and how such distinctions affect any of these engineering considerations.

- Based on those criteria, Walter agrees with those discussants who question the validity of distinguishing among “ASSERTIONs”, “EVALUATIONs”, “FINDINGs”, “OBSERVATIONs”, “FACTs”, “JUDGEMENTs”, etc. from a *semantic* perspective. When it comes to software that stores and reasons over information about individual patients, each of these notions essentially refers to some “BELIEF” about the state of a patient that was derived from some signal in the real world (let’s call it “data”), interpreted in some manner by an agent (either a person or an instrument), and then recorded in the information system using some representation scheme.

- “BELIEF” may not be the perfect term for this more abstract notion, but (1) it is different from the other terms that have been used in the discussion and the model under design to date, and (2) it appropriately connotes the subjective nature of all information stored in healthcare software systems.

- In this sense, information systems never contain purely objective “data”, per se, but only the recorded interpretations of data. All terms listed in caps above are subjective representations (BELIEFS) about a patient’s state in reality. As examples, consider the following BELIEFs about a patient that may be stored in an information system and later used for reasoning by humans or software:

- Patient X has a fasting blood glucose measurement of 9.4 mmol/L [even this entails the interpretation of a physical blood sample by a man-made instrument that applies certain analytical/interpretive techniques]

- Patient X has Type II diabetes

- Patient X has a first-degree relative with Type II diabetes

- Patient X has a probability of coronary artery disease > 35%

- Patient X has a papular rash

- Patient X has a Braden score of 7

- etc.

- Some of these beliefs are more subjective than others, and it may be important to represent in the information system the degree of subjectivity or uncertainty of each, because that may need to be taken into consideration when human users or decision-support algorithms reason over the recorded BELIEFs. However, I would posit that representing the “subjectiveness” or uncertainty of BELIEFs by simply categorizing them as “ASSERTIONs” versus “EVALUATIONs”, or “FACTs” versus “JUDGEMENTs”, is a blunt instrument that is neither necessary nor sufficient to support reasoning, and supporting reasoning by a person or a machine is the only important consideration in how these different types of BELIEFs are represented in the information system. At the simplest level, such reasoning by a machine might consist of the following rule:

- If Patient X has Y, then Z, where Y is some BELIEF about Patient X and Z is some other BELIEF about Patient X

- From a semantic point of view, whether the BELIEF “Y” is categorized as an ASSERTION, EVALUATION, FACT, or JUDGEMENT doesn’t seem to matter in applying the rule above.

- Finally, when it comes to discussion of entity/attribute/value triplets versus other ways of modeling BELIEFs, these seem to be standard engineering issues of alternative ways to model data in software systems and databases, rather than issues with semantic import. The semantics of the BELIEF and its implications in human or machine reasoning are the same, whether a BELIEF is modeled as

- the unary predicate Diagnosis-Patient-X (Type_II_Diabetes) or

- the binary predicate Patient X (has_diagnosis, Type_II_Diabetes) or

- the ternary predicate Belief (Patient_X, has_diagnosis, Type_II_Diabetes)

- From an engineering perspective, however, it certainly does matter how different beliefs are modeled in the information system, because the implemented reasoning mechanism must be able to pattern-match against BELIEFs accurately in order to draw inferences correctly. Specifically, if a rule is modeled as “Patient-X(has_diagnosis, Type_II_Diabetes) => Patient-X(needs_HgbA1c)”, then a belief modeled as “Belief(Patient_X, has_diagnosis, Type_II_Diabetes)” will fail to match for technical reasons (not because the semantics of the BELIEF in the database don’t match those in the rule’s antecedent). So, the data-modeling discussion is important from an engineering perspective, but I don’t believe it is useful or important to draw distinctions between ASSERTIONs and EVALUATIONs, or between binary predicates and EAV triplets, from a semantic/ontological/epistemological perspective, as some of the discussion to date has endeavored to do. Semantically, they are all equally BELIEFs.
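Walter's engineering point, that identical semantics can still fail to pattern-match when the structures differ, can be shown in a few lines of Python. The tuple layouts standing in for the binary and ternary predicates, and the `rule` and `to_binary` helpers, are assumptions made for illustration only.

```python
# Two encodings of the same BELIEF. The tuple layouts are illustrative assumptions.
binary = ("Patient_X", ("has_diagnosis", "Type_II_Diabetes"))          # Patient_X(has_diagnosis, ...)
ternary = ("Belief", "Patient_X", "has_diagnosis", "Type_II_Diabetes")  # Belief(Patient_X, ...)


def rule(record):
    """Patient(has_diagnosis, Type_II_Diabetes) => Patient(needs_HgbA1c).

    The antecedent is written against the binary layout only, so matching is
    purely structural: a record with a different shape never fires the rule.
    """
    if len(record) == 2 and record[1] == ("has_diagnosis", "Type_II_Diabetes"):
        return (record[0], ("needs_HgbA1c",))
    return None


def to_binary(record):
    """Normalize a ternary Belief(patient, relation, value) into the binary layout."""
    if len(record) == 4 and record[0] == "Belief":
        _, patient, relation, value = record
        return (patient, (relation, value))
    return record


print(rule(binary))              # ('Patient_X', ('needs_HgbA1c',))
print(rule(ternary))             # None: same semantics, different structure
print(rule(to_binary(ternary)))  # ('Patient_X', ('needs_HgbA1c',))
```

The normalization step is where the data-modeling decision shows up in practice: either every BELIEF is stored in one form, or every rule must be written against each form in use.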

Stan Huff states that the ASSERTION and EVALUATION_RESULT distinction within FINDING is structurally aligned with clinical practice. An ASSERTION is a natural statement for a clinician whose meaning is implied by the definition of a term (e.g., the patient has diabetes, where diabetes means a set of commonly understood clinical EVALUATIONs), while an EVALUATION_RESULT takes the form of a question and answer, where the question is typically a LOINC term and the answer is typically a SNOMED CT response (e.g., Q: “Does the patient have a skin ulcer risk?” A: the Braden score is 7, indicating a high risk). Stan notes that ASSERTIONs can be converted to EVALUATIONs, but the reverse is not always true, which implies that OBSERVATION or EVALUATION_RESULT can be the singular machine representation suggested by Gerard.

Thomas Beale supports a separation of EVALUATION_RESULT (which he assumes means FACT or OBSERVATION here) and ASSERTION (opinions of various kinds). The former state real things observed in the world; the latter are inferences. In the clear majority of cases, the difference is obvious, and confusing facts and inferences isn't likely to be a good idea in the kinds of intelligent computing environments we are aiming for. Thomas points out a kind of statement that many find hard to classify: an OBSERVATION converted to a score value, e.g., breathing OBSERVATIONs classified as 0, 1, or 2 per the Apgar criteria. Here the classification system (typically a score such as Apgar, Braden scale, Waterlow, Barthel, GCS, etc.) acts as an inference engine, converting OBSERVATIONs to EVALUATIONs according to a set of fixed rules. So, clinical statements based on these score systems can be treated as EVALUATIONs (as Stan noted above), but others see them as surrogates for bands of OBSERVATION values. The diagram appears to take the former line, with the Braden score = 7 as an EVALUATION.

- Thomas observes that if EVALUATIONs (measurement results) and ASSERTIONs (classifications) are the two reliable types of clinical statements relating to OBSERVATION, then 'EVALUATION' and 'ASSERTION' are not good names, because it is not clear where OPINIONs and DIAGNOSIS, ORDER and REPORT OF ACTION PERFORMED go. An analogy is the EP (Entity/Property) approach, which is common in ontology representations and may or may not deal with what we think of as values. EAV is more common in data modelling, where values are everything. So, the EA part of an EAV representation normally wants to be mapped to the EP structure of relevant ontologies.
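Thomas's description of a score system as an inference engine that applies fixed rules to OBSERVATIONs can be sketched as follows. Only two of the five Apgar components are shown, the respiratory-effort category labels are simplified assumptions, and the class and function names are hypothetical; this is not a clinical scoring implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    name: str
    value: object


@dataclass
class Evaluation:
    name: str
    value: int
    derived_from: list = field(default_factory=list)  # the OBSERVATIONs the rules consumed


def score_heart_rate(bpm: float) -> int:
    # Apgar heart-rate rule: absent -> 0, below 100/min -> 1, 100/min or more -> 2
    if bpm <= 0:
        return 0
    return 1 if bpm < 100 else 2


def score_respiration(effort: str) -> int:
    # Apgar respiratory-effort rule; the category labels are simplified assumptions
    return {"absent": 0, "weak or irregular": 1, "strong cry": 2}[effort]


RULES = {"heart rate": score_heart_rate, "respiratory effort": score_respiration}


def apply_score(observations):
    """Tiny 'inference engine': fixed rules turn OBSERVATIONs into one EVALUATION."""
    points = [RULES[o.name](o.value) for o in observations]
    return Evaluation("partial Apgar score", sum(points), list(observations))


obs = [Observation("heart rate", 120), Observation("respiratory effort", "weak or irregular")]
result = apply_score(obs)
print(result.value)  # 3
```

Keeping `derived_from` lets the score be read either way described above: as an EVALUATION, or traced back to the bands of OBSERVATION values it summarizes.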

Richard Esmond points out that one of the challenges that constantly crops up around this topic is nomenclature. Certain words and phrases are such a common part of our lives that it's nearly impossible not to gravitate towards them, so they get reused in confusing ways. Clinicians deal with and talk about reality in confusing ways; CIMI must disambiguate as much as possible and allow things to be stored in the EHR using single patterns rather than competing (confusing) ones.

- ASSERTION and EVALUATION_RESULT are a perfect example. The ASSERTION / EVALUATION_RESULT boundaries under discussion are not testable; the options are to proceed as suggested by Stan or to follow Keith’s recommendation to NOT use ASSERTION and EVALUATION.

- Having said that, Richard also agrees with Thomas’s comments about the very real-world distinction between 'fact-ish things' and 'judgement-ish things'.

- If an O2 sensor logs a data-point, it might have made an error (such as being disconnected), but even an erroneous reading is an important data-point. It's a data-point that records the fact that the O2 sensor wasn't working. So, I think of this type of information as 'fact-ish'.

- A clinician recording a diagnosis of depression is a good example of 'judgement-ish' information. There isn't a simple blood test for depression. A diagnosis would be made based on the preponderance of evidence, all of which will be subjective. I think of this information as 'judgement-ish'.

- Richard agrees with Thomas's point that these two scenarios are very different, but Richard’s understanding of the difference between ASSERTIONs and EVALUATION_RESULTs doesn't involve the 'fact-ish' / 'judgement-ish' boundary. He understands the difference between the ASSERTION-pattern and the EVALUATION-pattern as relating to how the 'question' is defined.

- The ASSERTION-pattern relies on an inherent understanding of the question being asked, pre-coordinated into the focus concept.

- An ASSERTION-pattern example: {Fracture of femur | 71620000}

- All by itself, this concept-value implies something about the question being asked. If this concept-value (all by itself) is inserted into a patient’s medical record, a certain implication could be assumed: they broke their leg. (I'll come back to presence / absence / certainty in a moment.)

- An EVALUATION-pattern example: {August 24 1980}

- This is obviously a date-value, but there is nothing inherent within the date-value to imply that it reports 'the date of X'. It could be a birth-date, admission-date or the date a patient died. This value needs a computable definition of the question being asked, because by itself it is insufficient as a data-point with computable meaning. The same holds true for Positive / Negative lab-results, or unit-based values, e.g., {25.3 ml/dl} only has meaning when paired with a question (an observable entity concept) for which it is the answer.

- As a summary of Richard’s view: the ASSERTION / EVALUATION_RESULT nomenclature is used within CIMI discussions to refer to whether or not the question is implied in the concept of the answer. But even in the case of ASSERTIONs, we are looking to add additional 'modifiers' and 'qualifiers' to both the ASSERTION-pattern and EVALUATION-pattern to document Presence / Absence, Method, Certainty, Risk, Criticality, etc. This is where I would assume the 'fact-ish-ness' and 'judgement-ish-ness' are most appropriately recorded (correct me if I'm wrong).

- Richard makes one last comment on what he believes Keith is referring to when Keith makes the point that ASSERTION and EVALUATION_RESULT don’t have to be differentiated.

- The ASSERTION-pattern is based on the premise that an additional concept-id isn't necessary to define the question being asked, but that doesn't mean you couldn't assign a single concept for 'FINDING-ASSERTION' and provide it each time, which would turn ASSERTIONs into EVALUATIONs. Now you just have one kind of 'thing' to diagram.

- Richard personally agrees with Keith's point. From an engineering perspective, the code is nearly identical: it would simply look for the 'FINDING-ASSERTION' concept and branch. The functional difference at run-time is still there, but the diagrams that we draw become somewhat less complex.
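Richard's distinction, and the conversion he attributes to Keith, can be sketched in Python. `Assertion`, `EvaluationResult` and the `FINDING-ASSERTION` placeholder are illustrative names, not CIMI reference-model classes; the fracture concept and the August 24 1980 date are the examples from the discussion, and "date of birth" is a hypothetical question label.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Union

# Placeholder for the single fixed question concept (not a real terminology code).
FINDING_ASSERTION = "FINDING-ASSERTION"


@dataclass
class Assertion:
    focus: str                                      # question is pre-coordinated into this concept
    qualifiers: dict = field(default_factory=dict)  # presence/absence, certainty, method, ...


@dataclass
class EvaluationResult:
    question: str                                   # explicit question (observable entity)
    answer: Union[str, date, float]                 # meaningless without the question
    qualifiers: dict = field(default_factory=dict)


def as_evaluation(stmt):
    """Keith's point as Richard describes it: give every ASSERTION the same question."""
    if isinstance(stmt, Assertion):
        return EvaluationResult(FINDING_ASSERTION, stmt.focus, dict(stmt.qualifiers))
    return stmt


def describe(stmt: EvaluationResult) -> str:
    # The run-time difference survives as a branch on the fixed question concept.
    if stmt.question == FINDING_ASSERTION:
        return f"asserted: {stmt.answer}"
    return f"{stmt.question} = {stmt.answer}"


fracture = Assertion("71620000 |Fracture of femur|", {"presence": "present"})
dob = EvaluationResult("date of birth", date(1980, 8, 24))

print(describe(as_evaluation(fracture)))  # asserted: 71620000 |Fracture of femur|
print(describe(as_evaluation(dob)))       # date of birth = 1980-08-24
```

In this sketch the branch does not disappear; it moves from the type of the statement to the value of its question, which is the trade Richard describes.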

Claude Nanjo believes that judgement-ish qualifiers (e.g., who asserted this fact, the supporting evidence/data, etc.) should probably be defined in specializations of the core pattern, or included in the core pattern but left to archetypes to keep or leave out. If we favor the former and wish to avoid design by constraint, then the current model needs to be modified, since right now it includes both types of qualifiers. Stan and Susan were exploring more appropriate specializations of these patterns.

- Claude agrees, though the fact that something is judgement-ish does not necessarily force you towards an EVALUATION_RESULT or ASSERTION pattern, even though in this case the ASSERTION pattern is more natural, i.e., I classify this patient as a member of patients with schizo-affective disorders, vs. mental disorder (or whatever the right key/characteristic is) = schizo-affective (the answer). Claude thinks we need two representations because one form is generally more natural than the other in specific contexts. Typically, measurements follow the EVALUATION_RESULT pattern rather than the ASSERTION pattern. On the other hand, classifications into cohorts, so to speak, tend to fall more in the ASSERTION camp and would look somewhat unnatural in the EVALUATION_RESULT format (though, as Keith points out, it can be done).

- Claude points out that at the level of the reference model, the distinction between EVALUATION_RESULTs and ASSERTIONs is primarily structural. An EVALUATION_RESULT captures information that needs to pair a key (question, etc.) with a result (answer, etc.). That information may be fact-ish or judgement-ish. In an ASSERTION, the concept being asserted is not paired with a result. Whether it is fact or judgement becomes relevant at the archetype level. For instance, CIMI would define specific archetypes to express, say, an encounter diagnosis on top of an ASSERTION pattern. This approach is taken because of the fuzziness of the boundary between OBSERVATIONs and conditions in FHIR at the core resource level; it is different for different people. The reality is that most fact-ish things will probably be represented using the EVALUATION_RESULT pattern (e.g., some patient characteristic and a value for that characteristic), and most judgement-ish things will probably be represented using the ASSERTION pattern. However, the two structures can be interconverted in some cases: Eye color = brown vs. 'Has brown eye color'.

- Claude notes that a related question is whether it's desirable to pre-coordinate meaning related to the 'Question' into the answer-concept, such as 'Family history of X' or 'On examination - X'. Pre-coordination is useful if you are stuck with an architecture that expects a single concept-id, like most legacy EMRs, but annoying otherwise. Claude feels that the preferred CIMI models/archetypes will not pre-coordinate, but iso-semantic representations that prefer pre-coordination in the code can. In CIMI, the ASSERTION is the TOPIC and the family history modifier is part of the CONTEXT. The Clinical Statement combines the TOPIC with the CONTEXT to represent a situation with explicit context.
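Claude's description of a Clinical Statement that combines a TOPIC with a CONTEXT, and its iso-semantic pre-coordinated counterpart, might look like the sketch below. It works on display strings rather than codes, treats any subject other than the patient as family history, and all names (`Context`, `ClinicalStatement`, `from_precoordinated`, `to_precoordinated`) are hypothetical.

```python
from dataclasses import dataclass, field

PREFIX = "Family history of "


@dataclass
class Context:
    subject: str = "patient"  # whom the TOPIC is about; anything else is treated as family history here


@dataclass
class ClinicalStatement:
    topic: str                                       # the ASSERTION, e.g. "breast cancer"
    context: Context = field(default_factory=Context)


def from_precoordinated(label: str) -> ClinicalStatement:
    """Split a pre-coordinated 'Family history of X' label into TOPIC + CONTEXT."""
    if label.startswith(PREFIX):
        return ClinicalStatement(label[len(PREFIX):], Context(subject="family member"))
    return ClinicalStatement(label)


def to_precoordinated(stmt: ClinicalStatement) -> str:
    """Fold the CONTEXT back into a single label, for systems that expect one concept."""
    if stmt.context.subject != "patient":
        return PREFIX + stmt.topic
    return stmt.topic


stmt = from_precoordinated("Family history of breast cancer")
print(stmt.topic, "|", stmt.context.subject)  # breast cancer | family member
print(to_precoordinated(stmt))                # Family history of breast cancer
```

Folding the CONTEXT back into one label can lose detail (for example, which relative), which is one reason a model might prefer to keep TOPIC and CONTEXT apart.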

Michael van der Zel recognizes the Figure 3 distinction between ASSERTION and EVALUATION from his own experience: in a current project, they model this as either an "interpreted" or a "raw data" OBSERVATION. The first is more of an EVALUATION_RESULT (interpreted by a human or machine) and the second is more of an ASSERTION (from human senses or sensor devices / lab / imaging / DNA).

Jay Lyle reiterates Stan’s point that it is possible to represent ASSERTIONs as EVALUATIONs, but there is more than one way to do it. Richard’s suggestion is that the question is some consistent value like “ASSERTION of” and the answer is the FINDING value we previously had in ASSERTION. Others suggest that the FINDING is the question, and the answer is either the presence concept or possibly a count. We will need to identify a criterion for determining whether to keep ASSERTION or merge it with EVALUATION, and another criterion for determining what EVALUATION_RESULT pattern to adopt.

- Regarding the ASSERTION/EVALUATION_RESULT terminology, Jay observes that if a terminology is already more broadly established in some domain to describe this distinction, it might be useful to adopt it; his search has been unsuccessful. Perhaps the entity approach Thomas suggested could help (especially if there were some existing reference), though using “property” and “attribute” to make the distinction may be confusing to some. Adopting one pattern sounds like a desideratum, but one to be weighed against another: capturing data close enough to the user's form to avoid excessive transformation. Still, a mixed internal representation will be a source of confusion; it is better to convert all data to the same underlying form.

- Jay Lyle argues that Family Hx. should be an ASSERTION. Thomas Beale states that statements in a Family History are literally facts, i.e., reports of OBSERVATIONs (e.g., mother had breast cancer), but are chosen and recorded because they act as surrogate statements of risk, e.g., of the risk of the daughter getting breast cancer. As such, they act as EVALUATIONs. There is thus a pattern in which an EVALUATION_RESULT about risk for patient P can be formulated in terms of an OBSERVATION about Q (some other entity). A normal EVALUATION_RESULT takes the form Obs + KB => Inference, where KB = knowledge base. In a Family History, usually just the OBSERVATION is recorded, because the very fact that the OBSERVATION is about another entity (normally a biological relative), together with the stated genetic relation (mother or whatever), directly implies a possible future diagnosis of the same kind of OBSERVATION for the patient. Jay Lyle is not sure whether Patient Obs. is an ASSERTION or an EVALUATION: a Complaint would be an ASSERTION, while a Physical Exam OBSERVATION could be either an ASSERTION (vital sign, ROS) or an EVALUATION_RESULT (lesion, breath sounds).

- In an earlier draft version of this diagram, Jay Lyle removed Condition between ASSERTION and the skin ulcer risk, because he didn’t think we have any distinguishing characteristic that makes it necessary to have a “condition”. In fact, he thinks having it reintroduces a complication we’re better off handling with the “concern” decoration: while it captures a common usage, what people call conditions are FINDINGs that someone is concerned about, and you can’t categorically classify any specific FINDING as a condition.

- Thomas suggests that if 'Condition' is to appear in statements, he would expect it to appear in an EVALUATION_RESULT (usually a Dx) that asserts the presence of the condition based on various supporting OBSERVATIONs. Hence 2h OGTT sample of > 7 mmol/L blood glucose => diabetes, where 'diabetes' is the name of a real process asserted to exist in the patient. Thomas does not understand what 'ACT', 'TOPIC' or the relation between them (no matter which way around it is) represent.

- Jay makes the following clarifications:

- is-about links: this is the “TOPIC” association. The TOPIC is what the statement is about; here, an ACT or a FINDING.

- Thomas thinks that by 'TOPIC' we probably mean just 'entity', since 'TOPIC' is likely to be a relative/subjective concept (whether something is a 'TOPIC' in a discourse is likely to depend on many things). He suggests 'FINDING' probably means 'state' i.e. state of a Continuant at some time.

- ASSERTION & EVALUATION_RESULT have nothing to do with objectivity: they are patterns. EVALUATION_RESULT is a question & answer (like a LOINC-supported value); ASSERTION is a unary statement like an SCT FINDING value. But Jay suggests calling FINDING something else in CIMI to distinguish it from SCT FINDING, which aligns semantically with the value half of EVALUATION’s LOINC question and SNOMED answer value pair.

- But Jay also notes that “there is a distinction in the concept of ‘attribution’ that still escapes him”.

- Thomas says that, in that case, he doesn't understand the choice of terminology at all. He suggests that normally one wants to adopt one representational style or the other and stick with it throughout any system, as Gerard did. At an interface one can imagine having to convert. But in any case, the way to think about these terms might be:

- 'ASSERTION': a predicate represented as a single atom - corresponds to an Entity/Property metaphysics where all things are either Entities or Properties of an Entity. Hence a Ferrari that is Red, i.e. has a Property of Red color.

- 'EVALUATION': a predicate represented as a binary structure - corresponds to an Entity/Attribute/Value (EAV) metaphysics, where the world is described in terms of Entities, their Attributes, and the Values of those Attributes. Hence, a Ferrari whose Color = Red.

- CONTEXT is instance-specific information (aka meta-data): for a planned procedure, CONTEXT is scheduled time, resources, etc., and for an observed fact, CONTEXT might be the patient, time, etc. Provenance data (who recorded what, when, where, how, etc.) is contained by the Clinical Statement. Thomas Beale has argued previously that the word 'CONTEXT' should be limited to situational CONTEXT, i.e., when, where and who at the instance level. The utility of this CONTEXT information is to help uniquely identify or key the clinical statement in time and space.

- Thomas argues that provenance is about who said each statement in the real world (also at the instance level). This is distinct from who recorded it into the information system, normally regarded as an IS audit concept, not a real-world provenance concept.
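Jay's observation that ASSERTIONs can be re-expressed as EVALUATIONs in more than one way, together with Thomas's Entity/Property versus Entity/Attribute/Value framing of the red Ferrari, can be sketched in Python. The type names, the `"present"` answer standing in for a presence concept, and the property lookup table are illustrative assumptions, not CIMI artifacts.

```python
from typing import NamedTuple


class EP(NamedTuple):   # Entity/Property: the predicate is a single atom (ASSERTION-style)
    entity: str
    prop: str


class EAV(NamedTuple):  # Entity/Attribute/Value: the predicate is a binary structure (EVALUATION-style)
    entity: str
    attribute: str
    value: str


def fixed_question(s: EP) -> EAV:
    """Richard's option: one consistent question; the finding becomes the answer."""
    return EAV(s.entity, "ASSERTION of", s.prop)


def finding_as_question(s: EP) -> EAV:
    """The other option: the finding becomes the question; the answer is a presence value."""
    return EAV(s.entity, s.prop, "present")


# Thomas's Ferrari: splitting a property into a real attribute and value needs
# extra knowledge. This lookup table is hypothetical.
PROPERTY_TO_AV = {"Red": ("color", "Red")}


def split_property(s: EP) -> EAV:
    attribute, value = PROPERTY_TO_AV[s.prop]  # KeyError if no split is known
    return EAV(s.entity, attribute, value)


red_ferrari = EP("Ferrari", "Red")
for convert in (fixed_question, finding_as_question, split_property):
    print(convert(red_ferrari))
# EAV(entity='Ferrari', attribute='ASSERTION of', value='Red')
# EAV(entity='Ferrari', attribute='Red', value='present')
# EAV(entity='Ferrari', attribute='color', value='Red')
```

All three outputs carry the same BELIEF in different shapes, which is why, as Jay notes, a criterion is needed for choosing one EVALUATION_RESULT form and then converting everything to it.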