CIMI Telecom Minutes 2017-01-26

- WIKI URL: http://wiki.hl7.org/index.php?title=Clinical_Information_Modeling_Initiative_Work_Group

- Wiki Objective: maintain and communicate current information with CIMI members, partners and stakeholders.

- CIMI Co-chairs: Stan Huff, Linda Bird, Galen Mulrooney, Richard Esmond

- WIKI Curator: Stephen.Hufnagel.HL7@gmail.com

- CIMI Telecoms: Thursdays at 3PM EST (4PM EDT), https://global.gotomeeting.com/join/754419973 US Dial-in 224-501-3316, Code: 754-419-973

- HL7 List server: CIMI@lists.HL7.org; you must be subscribed to the CIMI@lists.hl7.org list server to use it.

- REQUESTED ACTION: Edit this WIKI page or send your feedback to CIMI@lists.HL7.org with your comments, questions, suggested updates.

- Screen Sharing & Telecom Information

- https://global.gotomeeting.com/join/754419973 Dial-in: United States: +1 (224) 501-3316, Access Code: 754-419-973

- More phone numbers

- Australia : +61 2 8355 1034

- Belgium : +32 (0) 28 93 7002

- Canada : +1 (647) 497-9372

- Denmark : +45 89 88 03 61

- Netherlands : +31 (0) 208 084 055

- New Zealand : +64 4 974 7243

- Spain : +34 932 20 0506

- Sweden : +46 (0) 853 527 818

- United Kingdom : +44 (0) 330 221 0098


Attendees

Linda Buhl, Joey Coyle, Richard Esmond, Gerard Freriks, Bret Heale, Stan Huff, Steve Hufnagel, Patrick Langford, Jay Lyle, Susan Matney, Galen Mulrooney, Claude Nanjo, Craig Parker, Serafina Versaggi

Related Document

Annotated Agenda (Minutes)

Italicized lines were discussed

- Record this call

- Agenda review (Richard & Steve's suggested topics were added)

- Updates on active projects (standing item)

- Skin and wound assessments – Jay and Susan

- FHIM – CIMI integration - Galen

- Conclusions from the CIMI FHIM Task Force – Stan Huff

- Stan will update the document based on previous discussion, and bring the document back for review and approval in a few weeks (still not done)

- Steve: Is this overtaken by events (OBE)? There is a Final Report at: https://1drv.ms/w/s!AlkpZJej6nh_k9dQ2qQnRuQM8gbu8A

- Conversion of CIMI archetypes to FHIR logical models to FHIR profiles – Claude

- LOKI – Patrick is still working on it

- BMM parsing and serialization code – Claude

- Creating ADL models from CEMs

- First: recreate CEMs from BMM and ADL files

- Second: convert CEMs to BMM and ADL files

- Updates on HL7 Vocabulary WG threads - Richard

- Terminology & ValueSet Publishing

- Terminology Binding

- ValueSet Expansion

- Review plans and action items from San Antonio – Steve, All

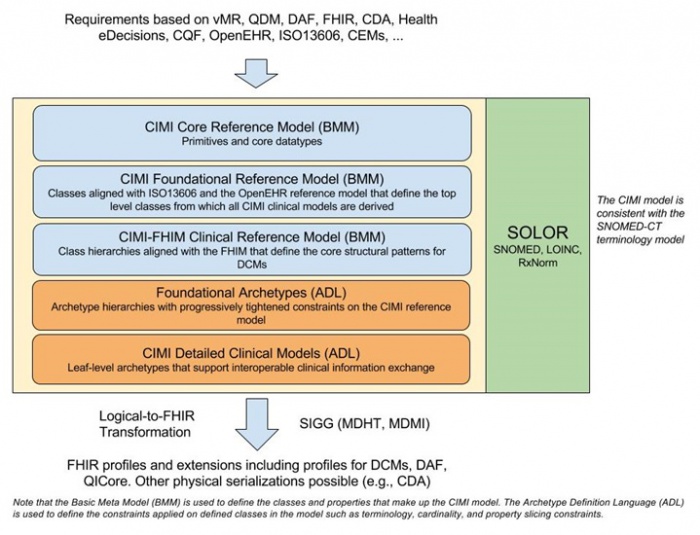

- The FHIR Management Group requested justification for the CIMI PSS being Universal Realm, since the bulk of our work appears US-centric (in particular SNOMED, LOINC, and RxNorm (SOLOR)).

- Review of assertion/evaluation example table format - Stan

- Motion: That we create an informative ballot for May. Moved by Jay, second by Claude. Affirm: all, Neg: 0, Abstain: 0.

- Review CIMI models from January comment only ballot – Claude, All

- Continued modeling (discussion) of assertions if time allows – Stan

- Future topics

- Review CIMI Observation Result pattern

- Harmonization of CIMI and FHIR datatypes - Richard

- How will CIMI coordinate with DAF? - Claude

- Granularity of models (schematic anchors) – from Richard

- We need a way to identify the focal concept in indivisible and group statements

- We would probably use the new metadata element

- New principle: Don’t include static knowledge such as terminology classifications in the model: class of drug, invasiveness of procedure, etc.

- Proposed policy that clusters are created in their own file – Joey, Stan

- The role of openEHR-like templating in CIMI’s processes - Stan

- Model approval process

- What models do we want to ballot?

- IHTSDO work for binding SNOMED CT to FHIR resources – Linda, Harold

- Which openEHR archetypes should we consider converting to CIMI models?

- Model transformations

- Transform of ICD-10 CM to CIMI models – Richard

- Requirements and tactical plan (Who does what) for May Ballot content

- Requirements and strategic Plan for how CIMI tooling can improve (make consistent) and integrate with current FHIR web-based tooling - Steve

- Requirements and strategic Plan for a streamlined process to produce FHIR Profiles and Extensions - Steve, Galen, Claude

- E.g., is there an expedited UML tooling path to FHIR StructureDefinitions, as recommended by Michael van der Zel, where the tooling can also produce ADL?

- Requirements and strategic Plans for CIMI Clinical Knowledge Manager (CKM) before we create stovepipe solutions - Steve

- Others?

- Any other business

Assertion vs. EvaluationResult criteria

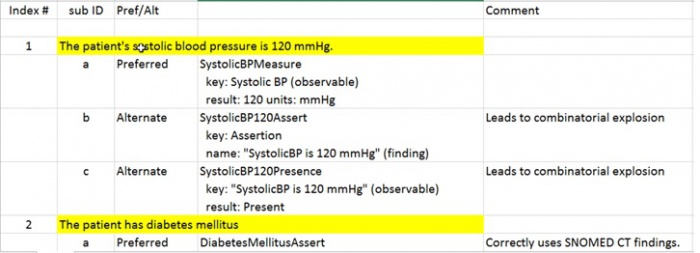

Stan presented an ASSERTION and EVALUATION_RESULT structure example from an Excel spreadsheet for workgroup discussion. Claude suggested adding separate PROS and CONS columns to justify why we selected the "Preferred" representation structure. Stan accepted the action to add the PROS and CONS columns and to continue adding illustrative examples.

- Spreadsheet: Model_Options_and_Examples_2017-01-26.xlsx

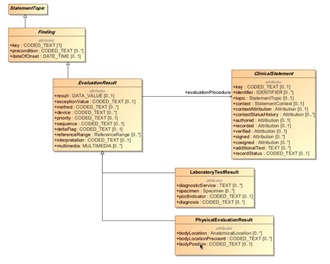

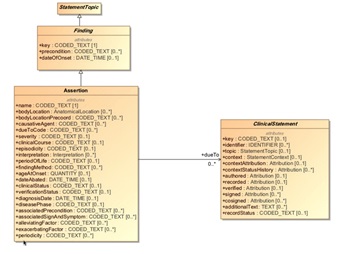

Claude presented the EVALUATION_RESULT and ASSERTION patterns shown above and asked how we might make them equivalent and round-trip transformable. Stan Huff pointed out that, in practice, these structural patterns are used in different contexts, have different attribute requirements, and do not have to be round-trip transformable. We discussed why categories, such as subjective or objective criteria, do NOT work universally in "edge cases". Stan verbally gave his ASSERTION and EVALUATION_RESULT structure selection guidelines, which Steve volunteered to document for workgroup review.

Jay's COMMENTS and QUESTIONS

- See http://wiki.hl7.org/index.php?title=CIMI_WGM_Agenda_January_2017#Thursday_Q1

- Use “EVALUATION_RESULT” to distinguish from the act of evaluation.

ASSERTION is analogous to a SNOMED CT FINDING in the patient, with presence, timing, and subject made explicit, as in the SNOMED CT SITUATION model.

- Key = Assertion;

- Value = Diabetes (finding), corresponds to Situation;

- subject = patient,

- context = present;

- associated finding = diabetes.

EVALUATION_RESULT is analogous to a SNOMED CT OBSERVABLE concept (or a LOINC code): it is the evaluation of a property in the patient.

- Key = Blood Type (Observable);

- Value = B negative
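A minimal sketch of the two key/value shapes Jay describes, assuming plain Python dataclasses. The class and field names are hypothetical illustrations, not the CIMI reference model, and concept names are plain strings rather than real SNOMED CT or LOINC codes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Situation:
    """Hypothetical stand-in for a SNOMED CT Situation-style value with explicit context."""
    associated_finding: str
    subject: str = "patient"
    context: str = "present"


@dataclass(frozen=True)
class Assertion:
    """ASSERTION: the key is fixed; the finding and its context travel in the value."""
    value: Situation
    key: str = "Assertion"


@dataclass(frozen=True)
class EvaluationResult:
    """EVALUATION_RESULT: the key names the observable property that was evaluated."""
    key: str
    value: str


# Jay's two examples, with concept names as plain strings (no real codes).
diabetes = Assertion(value=Situation(associated_finding="Diabetes (finding)"))
blood_type = EvaluationResult(key="Blood Type (Observable)", value="B negative")
```

In the ASSERTION shape the key says nothing about which property was examined, so presence and subject have to be carried inside the value; in the EVALUATION_RESULT shape the key itself identifies the property.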

QUESTIONS

- The question is not what the fact "is," but how we represent it.

- Do OBSERVABLES need a specific range?

- Can an OBSERVABLE have multiple findings (e.g., pulse rate and pulse strength)?

- Are a waveform's frequency, amplitude, and shape OBSERVABLES? We think so.

- Can many OBSERVABLES be baked into the same ASSERTION, such as wound characteristics?

- Does Stan's guideline define the preferred structure for:

- Patient characteristic vs. whole patient?

- Category (e.g., the drug injector question)?

- Can we use queries to differentiate a table of examples and edge cases?

- If the answer is Boolean or Present/Absent --> YES, use ASSERTION

- Case: "absent risk". Should it use:

- multiple context values,

- pre-coordination, or

- another approach?

- We want a single representation that can be reliably used for both cases.

- EVALUATION_RESULT to FINDING is easy:

- FINDING of blood type B negative.

- Systolic Blood Pressure is 131 (unlikely but unambiguous)

- ASSERTION to EVALUATION_RESULT can be harder and is not supported by CIMI patterns

- In order to logically transmute Finding of Diabetes into an evaluation, not only do we need a corresponding Observable concept, but the Finding concept or Observable concept needs to be defined in terms of the other.


- More questions

- Can we negate loss of consciousness within a present head injury?

- The situation model has only one site for Finding Context.

- These have to be separate Situations in the current model;

- their relationship lives in CIMI, not in the ontology.

- PenRad uses CliniThink, which uses attribute chaining rather than role groups, apparently successfully.

- EvaluationResult has Method; how do you do that in Assertion?

- Assertion has [property]; how do you represent that in an EvaluationResult?

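The asymmetry noted above (EVALUATION_RESULT to FINDING is easy, ASSERTION to EVALUATION_RESULT is hard) can be sketched as two conversion functions. This is an illustration under stated assumptions: the lookup table and the concept "Diabetes status (observable)" are hypothetical, standing in for a terminology in which the Finding is defined in terms of an Observable.

```python
# Hypothetical knowledge table: Finding concept -> (Observable concept, value).
# The reverse conversion only works where such a definition exists.
FINDING_DEFINITIONS = {
    "Diabetes (finding)": ("Diabetes status (observable)", "present"),
}


def evaluation_to_finding(observable: str, value: str) -> str:
    """EVALUATION_RESULT -> FINDING: always possible by composing key and value."""
    return f"Finding of {observable}: {value}"


def assertion_to_evaluation(finding: str) -> tuple:
    """ASSERTION -> EVALUATION_RESULT: needs an Observable that defines the Finding."""
    try:
        return FINDING_DEFINITIONS[finding]
    except KeyError:
        raise LookupError(f"No Observable definition for {finding!r}") from None
```

The forward direction needs no extra knowledge, while the reverse direction fails for any Finding that lacks a corresponding, formally linked Observable.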

Joey's COMMENTS

Sent: Friday, January 27, 2017 10:43 AM, Subject: Re: CIMI meeting 26-1-2017: Assertion

- Stan has talked about Assertion and Evaluation as 2 'modeling styles'.

- Gerard defined ‘assertion’ as: a confident and forceful statement about a fact or belief.

- I think the Assertion / Evaluation distinction does conform to Gerard's definition, when one focuses on 'modeling style', and not the resulting data instances.

- The Evaluation modeling style is NOT a "confident and forceful statement about a fact or belief." Since the value set has many options, the eye color could still be blue, brown, or green.

- The Assertion modeling style is confidently and forcefully saying this model has to represent blue eye color.

- I will agree, though, that once a data instance is created for either of these stating "blue eye color", they are equally confident and forceful statements about a fact or belief.

- Thus I feel the terms Stan is using for the 2 modeling styles follow your definition with respect to 'modeling style', but yes, I agree this distinction disappears once you create instances from these models.

- That being said, I'm sure if we can find better terminology for these modeling styles, Stan would welcome that.

Assertion Style

BlueEyeColor

key isA_Assertion_code value blue_eye_color_code

Evaluation Style

EyeColor

key isA_Eye_color_code value any eye color code (from the value set of all eye colors)
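Joey's distinction between the two modeling styles can be sketched as model definitions that differ only in how many values they allow. A minimal sketch, assuming Python dataclasses; the `ModelDefinition` class, the `instantiate` method, and the three-color value set are hypothetical, while the model names and codes are taken from the examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelDefinition:
    """Hypothetical model definition: a fixed key code plus the values an instance may take."""
    name: str
    key_code: str
    allowed_values: frozenset

    def instantiate(self, value: str) -> dict:
        """Create a data instance, rejecting values the model does not allow."""
        if value not in self.allowed_values:
            raise ValueError(f"{value!r} is not allowed by {self.name}")
        return {"key": self.key_code, "value": value}


# Hypothetical value set; a real model would bind to a terminology value set.
EYE_COLORS = frozenset({"blue_eye_color_code", "brown_eye_color_code", "green_eye_color_code"})

# Assertion style: the model itself already says "blue eye color".
blue_eye_color = ModelDefinition("BlueEyeColor", "isA_Assertion_code", frozenset({"blue_eye_color_code"}))

# Evaluation style: the model leaves the answer open within the value set.
eye_color = ModelDefinition("EyeColor", "isA_Eye_color_code", EYE_COLORS)

assertion_instance = blue_eye_color.instantiate("blue_eye_color_code")
evaluation_instance = eye_color.instantiate("blue_eye_color_code")
```

The definitions differ (one allowed value vs. many), but both instances carry the same value, which matches Joey's point that the distinction is about modeling style and disappears at the instance level.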

Gerard's ISSUES & QUESTION

- Sent: Friday, January 27, 2017 9:41 AM, Subject: CIMI meeting 26-1-2017: Assertion

I have serious problems with the current line of thinking about the use of the terms ‘Assertion’ and ‘Finding’; it is impossible for me to grasp the difference between Assessment and Finding.

- When I look up the meaning of ‘assertion’ I read: a confident and forceful statement about a fact or belief.

- To me, any statement is therefore an assertion; a ‘Finding’ is an assertion as well.

- To me, we need only 5 or 6 kinds of statements as main patterns, corresponding to a common, accepted clinical model:

- Clinical model documentation process phases:

- Observation of phenomena that result from a process. Human senses are used.

- Assessment of a process. When the process is one in the Patient System it is called Diagnosis. Implicit and explicit resources are used. Mental processes/CDS play a role.

- Planning of the execution of a process. Mental processes/CDS play a role.

- Ordering the execution of a process. When the target is a process in the Patient System we call it treatment, or it can be the ordering of Observations. Mental processes/CDS play a role.

- Execution of the process. E.g., lab tests can generate a lot of detailed additional information.


- Each statement is about processes that can be located in the Patient System or in other, more administrative processes.

- The documentation in the phases above is a process as well.

- Any statement can be used in:

- any of the Clinical model phases

- relation to processes in the Patient System or any administrative process and,

- any interface

- All Statements have the pattern:

- Key, Result

- Context (why, who, when/where, how)

- additional metadata that indicate:

- the clinical process phase it is used in

- the kind of process

- the kind of interface

- The additional metadata indicate how the statement is used in the documentation process.

- The context defines the context of what is documented.

- The examples provided by Stan in his spreadsheet:

- 1a: Key=SystolicBPMeasurement (when it is a new observation)

- Result= 120 mmHg

- ClinicalProcessPhase: Observation

- Target: Patient System

- Context: …

- When this Observation is re-used, the target = the process that is observed.

- 4a: Key=Diagnosis (when it is a new assessment of a Patient System)

- Result= DiabetesMellitus

- ClinicalProcessPhase: Diagnosis

- Target: PatientSystemProcess

- Context: …

- When this Assessment is not a de novo fact but is re-used, the target = the administrative process that is observed

- 4b: Key=Diagnosis (when it is a new assessment of a Patient System)

- Result= DiabetesMellitus

- ClinicalProcessPhase: Execution

- Target: AdministrativeDischargeProcess

- Searching for the Key/Result pair returns all of them. Some additional attributes are needed to retrieve only the FinalDiagnosis entries in a discharge letter, or only the de novo registrations of the diagnosis.


- Any statement has Modifiers:

- present or absent

- certainty (uncertain, …, certain)

- Status (no result, intermediate result, final result)

- Observe that I split presence and certainty because they are not the same concept.

- Observe that I use a very specific Status at the semantic level of Statements.

- The examples in the spreadsheet are about whether the datum is present or not in the technical interface. This is handled outside of the statement. In ISO 13606 it is coded via structural attributes in the Reference Model (RM).

SPREADSHEET QUESTION: How can you create a CIMI pattern that expresses that: blood pressures above 130 mmHg are Not Present?

2017-01-26 Action-01 (Stan): Flesh out ASSERTION & EVALUATION_RESULT example spreadsheet

- add PROS and CONS columns to justify "Preferred" representation

- add additional illustrative examples

- Response to Action-01: Updated Model Options-and-Examples spreadsheet

2017-01-26 Action 02 (Steve): Document ASSERTION vs. EVALUATION_RESULT criteria

Result to Action-02

- Stan stated: 'Reasoning' vs. 'Finding', 'Subjective' vs. 'Objective', and 'Fact-ish' vs. 'Judgement-ish' categories work more than 80% of the time, but they are not reliable criteria for a small set of 'edge cases'.

- Stan proposed:

- In most cases it is obvious when to use ASSERTION (e.g., 'Reasoning', 'Subjective', 'Judgement-ish' things, such as DIAGNOSIS, PROBLEM, COMPLAINT, FAMILY_HISTORY)

- The Patient DISCHARGE_DIAGNOSIS is ... (implying the set of findings defining the DISCHARGE_DIAGNOSIS)

- John has blue eyes

- In the small number of cases where it is not obvious when to use ASSERTION, CIMI prefers that

- ASSERTIONS be used when the answer to an EVALUATION_RESULT is 'Present' or 'Absent', 'Yes' or 'No', 'True' or 'False', etc.

- Although it is theoretically possible, for practical implementation reasons CIMI does not support round-trip conversion between EVALUATION_RESULT and ASSERTION structures, as illustrated by the differing sets of ASSERTION and EVALUATION_RESULT attributes shown above.

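Stan's verbal guideline can be approximated as a small decision helper. This is a sketch under stated assumptions, not a CIMI rule engine: the guideline is stated for human modelers, and the function name, the topic set, and the exact list of binary answer sets are hypothetical encodings of the bullets above.

```python
# Topics where, per the guideline, ASSERTION is the obvious choice.
OBVIOUS_ASSERTION_TOPICS = {"DIAGNOSIS", "PROBLEM", "COMPLAINT", "FAMILY_HISTORY"}

# Answer sets that CIMI prefers to express as ASSERTIONs in unclear cases.
BINARY_ANSWER_SETS = [
    {"present", "absent"},
    {"yes", "no"},
    {"true", "false"},
]


def preferred_structure(topic: str, possible_answers: set) -> str:
    """Suggest ASSERTION or EVALUATION_RESULT following the recorded guideline."""
    if topic.upper() in OBVIOUS_ASSERTION_TOPICS:
        return "ASSERTION"
    answers = {answer.lower() for answer in possible_answers}
    if any(answers == binary for binary in BINARY_ANSWER_SETS):
        return "ASSERTION"
    return "EVALUATION_RESULT"
```

For example, a diagnosis is an ASSERTION by topic, a blood type with several possible values is an EVALUATION_RESULT, and a Present/Absent question such as loss of consciousness falls back to ASSERTION.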

Proposed Post-Meeting Action: Vote to add above to CIMI Principles.

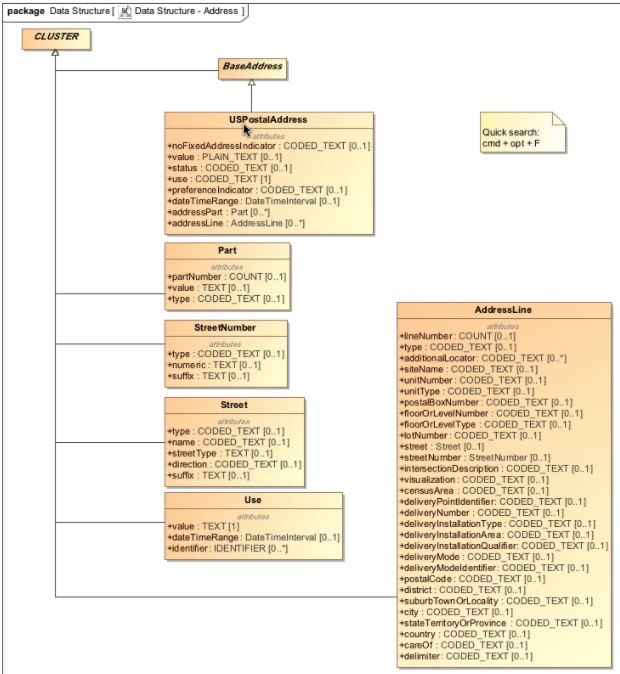

Proposed Assertion-PostalAddress

- Claude presented two figures: the first shows the current CIMI BMM layers, and the second shows a US- and Canada-centric ADDRESS pattern. Claude asked whether country-specific addresses (e.g., Japan) should be a layer between layers 4 and 5 or be integrated into layer 4. Galen suggested we follow the FHIM approach of an abstract address class with country-specific subclasses. Stan concurred with Galen and recommended that they be integrated into layer 4, because if subclass attributes migrate to the abstract address class, or vice versa, they will remain consistent. Claude took the action to add an abstract address class.
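The abstract-class-with-subclasses approach Galen described from FHIM can be sketched with Python dataclasses. A minimal sketch under stated assumptions: the class and attribute names are hypothetical and do not reproduce the FHIM or CIMI BMM ADDRESS definitions.

```python
from dataclasses import dataclass, fields


@dataclass
class Address:
    """Hypothetical abstract ADDRESS class holding attributes common to all countries."""
    street_lines: tuple
    city: str
    country: str

    def __post_init__(self):
        # Only country-specific subclasses are concrete.
        if type(self) is Address:
            raise TypeError("Address is abstract; use a country-specific subclass")


@dataclass
class USAddress(Address):
    state: str = ""
    zip_code: str = ""


@dataclass
class CanadaAddress(Address):
    province: str = ""
    postal_code: str = ""


@dataclass
class JapanAddress(Address):
    prefecture: str = ""
    postal_code: str = ""


def shared_attributes() -> list:
    """Attributes every country subclass inherits from the abstract class."""
    return [f.name for f in fields(Address)]


def country_specific_attributes(cls) -> list:
    """Attributes a subclass adds on top of the abstract class."""
    shared = set(shared_attributes())
    return [f.name for f in fields(cls) if f.name not in shared]
```

Because every country class inherits from the same abstract class in the same layer, moving an attribute such as a postal code up into `Address` (or back down) changes the shared set in one place and all subclasses stay consistent, which is the property Stan cited for keeping them together in layer 4.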

2017-01-26 Action-03 (Claude): Add ADDRESS abstract class in Foundational Archetypes BMM layer

- Stan: Keep country-specific address classes in Foundational Archetypes BMM layer

- Action-03 Result