Difference between revisions of "Assessment Scales"


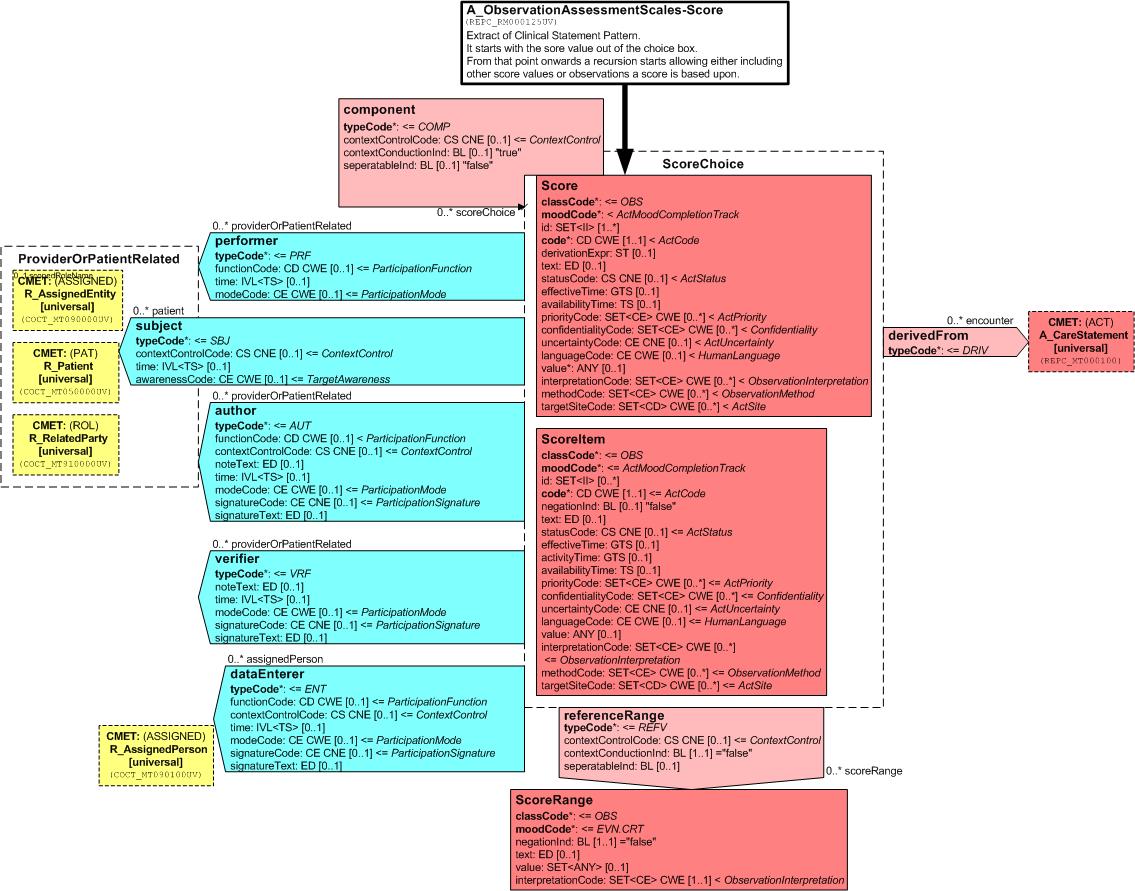


Revision as of 19:30, 25 September 2008

There is work underway for a new R-MIM on assessment scales.

A_ObservationAssessmentScales-Scores (REPC_RM000125UV)

Frank Oemig, William Goossen, 2008

Updated version 2 from Vancouver discussion

This R-MIM will be worked on over the next couple of months in order to get it into ballot as soon as possible.

The paper below will be organized according to the following three components:

1. Rationale for paying attention to assessment scales / scores / indexes as a separate topic

2. The HL7 v3 ballot material per se: use cases, storyboards, dynamic model, static R-MIM.

3. Instructions for defining an assessment scale as a Detailed Clinical Model, including its coding, and for using it against the care statement / assessment scale R-MIM.

It will also deal with interpreting the score values and with implementing them in CDA documents and messages.

This R-MIM for assessment scales or scores is an improved version of REPC_RM000120UV.

Diagram


Description

Parent: Care Structures Event Statement (REPC_RM000100UV)

Assessment Scales – Scores Structure Overview

Given the interest in earlier versions of the Care Provision Ballot, further work has been undertaken on representing assessment scales, scores or indexes. The scientific testing of such instruments puts specific requirements and constraints on their use in HL7 v3 message structures, in order not only to achieve a semantically equivalent data exchange but also to preserve the clinimetric characteristics of the instruments as a whole.

Assessment scales, scoring systems or indexes are observations with specific characteristics. They can consist of severity classification systems or point-total systems that aim to make a quantitative statement on the severity and prognosis of a disease or other aspect of human functioning. They are quite often an attempt to convert ‘soft’ observations into ‘hard’ data and evidence. Most such instruments used in healthcare have been tested extensively for validity, reliability and usability. Many of them have been in use worldwide for decades.

Typically, assessment scales or scores combine the findings of individual values into a total score which can be interpreted more easily against a reference population. In the simplest case, the score is just a single value or is based on two individual values; in a complex case it can consist of several dozen values which are combined using a complex mathematical calculation or statistical technique.

This R-MIM tries to give guidelines on the representation in information models, in particular against the HL7 clinical statement pattern and the care statement R-MIM for care provision.

In addition to the information model, the appropriate use of terminology and consistency between the information model and the terminology model are crucial for safe exchange of such observations.

Walkthrough

The following items are of interest for the exchange of information on assessment scales / scores:

• Code (how to identify the data?)
• Code system (what is the originating catalog?): LOINC, alternatively SNOMED CT if a licence is available
• Name + description (short textual description)
• Value (information)
• Unit
• Interpretation

In addition to this information for an individual score, the underlying values used for its calculation are of importance as well. These are described by the same six items listed above.
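As an illustration only, the six items could be held in a small record like the one sketched below. This is not part of the R-MIM: the class name `ScoreEntry`, its field names, and the placeholder code and code system values are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class ScoreEntry:
    """Hypothetical record holding the six items listed in the walkthrough."""
    code: str                         # how to identify the data
    code_system: str                  # originating catalog, e.g. LOINC
    name: str                         # short name; description is optional
    value: Union[int, float, str]     # the score itself
    unit: Optional[str] = None        # many scores are unitless
    interpretation: Optional[str] = None
    description: str = ""


# Placeholder values, not real codes from any terminology.
example = ScoreEntry(
    code="EXAMPLE-TOTAL",
    code_system="EXAMPLE-CODE-SYSTEM",
    name="Example total score",
    value=15,
    interpretation="moderately limited",
)
```

The same record shape can be reused for the underlying values, since they carry the same six items.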

Care Statement extract

The R-MIM for the assessment scales – scores can be seen as an extract of the Clinical and Care Statement Pattern, thus inheriting its characteristics. The R-MIM representation starts at the top with the name and identification in the entry point. For a detailed explanation of all attributes and vocabulary, the reader is referred to the generic descriptions of the Reference Information Model and to the walkthrough of the care statement D-MIM.

Score Observation

The R-MIM then starts with the score observation out of the choice box. As said above, the crucial attributes include:

• code, which identifies the variable and in which the code system can also be listed.
• text, which holds the name and description of the assessment instrument.
• value, in which the actual score and, where applicable, the unit can be entered.
• interpretationCode, where the interpretation of the value can be expressed via codes, often against a reference value.
• The derivation, which records how the total score for the assessment scale is obtained. For example, the derivation method can be to add the values of the separate score items to form the total score.
• Effective time is the point in time at which the instrument is scored. In order to be valid and reliable, the ScoreItems need to have (almost) the same time stamp. Such constraints posed by assessment instruments are important and extend beyond mere semantic interoperability. For the receiver it is important not only to understand what the message content means, but also to be assured that the data were collected according to the guidelines for the instrument.
• The value of the score, created by adding the individual score items, is filled in. This is often a Coded Ordinal data type. It is mandatory to score and fill in all items to get a proper sum score, which is why mandatory (1..1) component relationships often exist for scoring instruments.
• All other relevant attributes can be applied as needed.
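The constraints described in this list (all items mandatory, nearly identical time stamps, total derived by addition) can be sketched as a simple check. This is a minimal illustration under stated assumptions: the function name `total_score`, the item names in the usage example, and the 30-minute tolerance are hypothetical; the source only says the time stamps must be "(almost)" the same and does not define a tolerance.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple


def total_score(
    items: Dict[str, Tuple[int, datetime]],
    required: Iterable[str],
    max_spread: timedelta = timedelta(minutes=30),  # assumed tolerance
) -> int:
    """Add the item values, enforcing the (1..1) and time-stamp constraints."""
    required = list(required)

    # Every mandatory item must be present to get a proper sum score.
    missing = [name for name in required if name not in items]
    if missing:
        raise ValueError(f"missing mandatory score items: {missing}")

    # The items need (almost) the same time stamp.
    times = [items[name][1] for name in required]
    if times and max(times) - min(times) > max_spread:
        raise ValueError("score items were not scored at (almost) the same time")

    # Derivation method in this sketch: plain addition of the item values.
    return sum(items[name][0] for name in required)
```

For example, two hypothetical items scored five minutes apart with values 2 and 3 yield a total of 5, while omitting one of them raises an error instead of returning a partial sum.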

Component relationship to ScoreItem Observation

From that point onwards a recursion starts based on the component relationship, allowing the inclusion of either other score values or the observations a score is based upon. Beneath the Score observation is the observation (OBS) with the name ScoreItem. Both the class Score and the class ScoreItem have class code = OBS.
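The recursion over the component relationship can be pictured with a small tree structure. The sketch below is illustrative only: the class `Obs`, its fields, and the choice of addition as the derivation are assumptions for this example and are not taken from the R-MIM.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Obs:
    """Hypothetical stand-in for Score and ScoreItem (both class code OBS)."""
    name: str
    value: Optional[int] = None
    components: List["Obs"] = field(default_factory=list)


def derive_value(obs: Obs) -> int:
    """Return the value of a leaf, or the sum of the values of its components."""
    if not obs.components:
        if obs.value is None:
            raise ValueError(f"score item {obs.name!r} has no value")
        return obs.value
    # A component may itself be a score with its own components.
    return sum(derive_value(component) for component in obs.components)
```

Because a component can be either a plain item or another score, the same function handles a flat instrument and one built from sub-scores.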

Derived from relationship to Care Statement CMET

The derived-from relationship to the care statement allows the user to describe any relevant information on why a score item is scored the way it is. It allows any human thoughts to be expressed as observations alongside the score itself, as well as any circumstances, such as a procedure being carried out or the specific encounter during which the score was measured. The relationship points to the choice box, so that such statements can be made at the level of the assessment instrument as a whole or for an individual ScoreItem.

Linkages to subject and provider roles

< to be completed>

The score systems can be divided into different categories: those with arbitrary scores, those with discrete values and those with an unspecified number of values. Patient Classification Systems for workload are examples of the first category, the Barthel index is an example of the second, and the BMI (Body Mass Index) is an example of the third.

Arbitrary scores

A scale is a set of linear values from a certain range. A reference range can be determined. In this case, an interpretation according to the conventional scheme is possible, i.e. a value is interpreted as "normal" if it is within the reference range:

However, it should be noted here that an interpretation as too low is not necessarily combined with the abnormal flag.
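The conventional scheme for arbitrary scores can be sketched as follows. The function name and the plain-text labels are assumptions for this example, not coded values from any vocabulary; in line with the note above, the sketch returns only the interpretation and leaves any abnormal flag to be decided separately.

```python
def interpret_against_range(value: float, low: float, high: float) -> str:
    """Classify a value against an inclusive reference range [low, high]."""
    if low > high:
        raise ValueError("reference range is empty: low exceeds high")
    if value < low:
        return "too low"    # does not by itself imply an abnormal flag
    if value > high:
        return "too high"
    return "normal"
```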

Discrete values (scores)

The normal range and the set of all possible values are identical. For example, the Barthel Index allows values from 0 to 100 in steps of 5. The reference range, which can be specified, coincides with this range, so that the available assessment options (abnormal flags) are not usable. The interpretation of a score depends on the part of the reference range to which it belongs. Hence the referenceRange relationship to another OBS with the name ScoreRange.

For example:

Interpretation shows how the total score should be interpreted. For instance for the Dutch version of the Barthel index, this is 0-9 for seriously limited, 10-19 for moderately limited and 20 for independent.
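The mapping from a total score to its interpretation can be sketched as a lookup over sub-ranges, mirroring the idea of ScoreRange observations. The ranges and labels are the ones given in the text for the Dutch version of the Barthel index; the names `SCORE_RANGES` and `interpret_barthel_nl` are assumptions for this example.

```python
# (low, high, interpretation), bounds inclusive, as stated in the text.
SCORE_RANGES = [
    (0, 9, "seriously limited"),
    (10, 19, "moderately limited"),
    (20, 20, "independent"),
]


def interpret_barthel_nl(total: int) -> str:
    """Return the interpretation for a total score of the Dutch Barthel index."""
    for low, high, interpretation in SCORE_RANGES:
        if low <= total <= high:
            return interpretation
    # Every valid score falls into exactly one section of the range.
    raise ValueError(f"total score {total} is outside the range 0-20")
```

Keeping the ranges in a table rather than in branching logic makes it straightforward to give each score system its own set of interpretation values.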

Finally the ScoreChoice box has participations with subject and author as explained in detail in the clinical statement pattern.

As mentioned above, an assessment of the results by the conventional scheme of a reference range, where values outside the range are too high or too low, does not apply. All values fall into the range defined by the score system. As explained above, this range is divided into sections, which can then be evaluated and interpreted separately. Each score system should get its own (new) set of interpretation values, which can be defined according to the following scheme. If the code values come from different code systems, the code systems must be included:

Table: Interpretation of results

Some score systems may share the same interpretations.

Contained Hierarchical Message Descriptions

Care Structures Event Assessment Scale

REPC_HD000125UV

Further walkthrough to be determined.