Persistence Models versus Interoperability Models

This page is an initial draft, and as such it needs a lot more work. Please feel free to add your thoughts or concerns.

Summary

HL7 has a methodology in place for creating 'models for interoperability'. RIMBAA implementers who work with RIM-based persistence have to deal with 'models for persistence'. The two are not the same (see the examples below).

Questions:

- Do we need to add 'persistence models' to the HL7 methodology? What use would a software developer have of such a model?

Note: there are other issues (not just context conduction as used in the example below) which cause the need for the persistence model to be different from the 'model for interoperability'. Maybe a denormalization of datatypes. Maybe replacing all CMETs with their [universal] flavor. That is the subject of this issue: what are the differences between the model for persistence and the model for interoperability? It's certainly not just context conduction; that's just a very intuitive initial example. Rene spronk 18:12, 13 January 2011 (UTC)

Examples

The following examples illustrate how the 'model for interoperability' differs from the 'model for persistence'.

Templates are 'for interoperability'

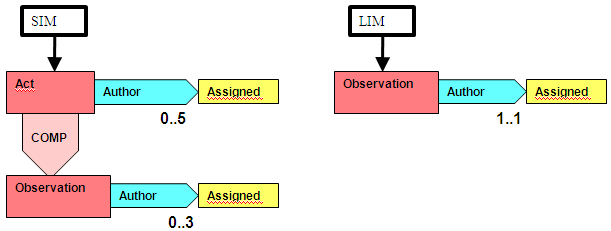

Example #1 (based on context conduction of participations; there are other kinds of examples). The models above are 'models for interoperability'.

- Let's assume that the SIM/R-MIM shown is a service payload model and that there are no wrappers. The instance on the wire may have, as shown above, [0..5] participations on Act, and [0..3] participations on Observation.

- On persisting this instance, context conduction may be decomposed (as described in this issue xxx), which means that the persisted object structure has [0..5] participations on Act, and [0..8] participations on Observation (its own 3 plus the 5 conducted from Act).

- The template/LIM shown above specifies a constraint on the SIM/R-MIM (Note: it applies to the Observation act class as shown in the left hand model). It is a constraint on a 'model of interoperability', and not on the 'model for persistence'.

- This is often poorly understood within HL7 and by HL7 implementers. The template does NOT define that there will always be exactly 1 author for the Observation, because the Observation could still inherit participations from elsewhere in the model. If one were to query, at some later point in time, for Observations, and Observation is the only class of type Act in the response model, the query response is going to contain a maximum of 8 authors, and not a maximum of 3 as most people would expect.

- If the template applies, as stated above, then the cardinality (in the model for persistence) of the author participation on Observation is [1..6]: the template constrains the Observation's own [0..3] cardinality to exactly 1, and up to 5 further participations are conducted from Act.
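The cardinality arithmetic in Example #1 can be sketched in a few lines of Python. This is only an illustration of the reasoning above, not part of any HL7 methodology; the class and function names are made up for the sketch, and it assumes that every participation on Act conducts to Observation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cardinality:
    low: int
    high: int

    def __add__(self, other: "Cardinality") -> "Cardinality":
        # Conducted participations add to the ones stated locally.
        return Cardinality(self.low + other.low, self.high + other.high)

    def __str__(self) -> str:
        return f"[{self.low}..{self.high}]"


# Cardinalities as stated in Example #1 (model for interoperability).
ACT_PARTICIPATIONS = Cardinality(0, 5)
OBSERVATION_PARTICIPATIONS = Cardinality(0, 3)

# Assumption: the template narrows the Observation's own author to exactly one.
TEMPLATE_AUTHOR = Cardinality(1, 1)


def persisted_cardinality(own: Cardinality, conducted: Cardinality) -> Cardinality:
    """Cardinality on the child class once context conduction is decomposed."""
    return own + conducted


without_template = persisted_cardinality(OBSERVATION_PARTICIPATIONS, ACT_PARTICIPATIONS)
with_template = persisted_cardinality(TEMPLATE_AUTHOR, ACT_PARTICIPATIONS)

print(without_template)  # [0..8]
print(with_template)     # [1..6]
```

The point of the sketch is that the template only changes the first operand; the conducted part is untouched, which is why the persisted cardinality is not the one the template states.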

Discussion:

- They could be the same if you just persist and don't process. Do we assume that the interoperable models are always processed before being persisted? -- Michael

- See Context Conduction in RIMBAA Applications: no, we're not assuming that one denormalizes the conduction prior to persisting the objects. I guess the term to use is 'logical persistence model'; quite how one implements that is another question. To me the 'logical persistence model' associated with the R-MIM of example 1 would show a fully denormalized version (with regard to context conduction). As such the persistence model (when it comes to context conduction) is a transform away from the R-MIM. Rene spronk

- Note: there are other issues (not just context conduction as used in the example above) which cause the need for the persistence model to be different from the 'model for interoperability'. Maybe a denormalization of datatypes. Maybe replacing all CMETs with their [universal] flavor. That is the subject of this issue: what are the differences between the model for persistence and the model for interoperability? It's certainly not just context conduction; that's just a very intuitive initial example. Rene spronk 18:12, 13 January 2011 (UTC)

- What if we wanted to constrain, at the persistence layer, that a certain observation can only have 1 author? This type of constraint can't be expressed in an XML instance that's sent over the wire.
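As a rough illustration of the last point, a constraint of this kind is straightforward to state in a relational persistence layer. The sketch below uses Python's built-in sqlite3 module; the table and column names are hypothetical and not taken from any RIMBAA implementation, and it assumes that participations have already been denormalized so that every author (conducted or not) is a row against the act.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE act (
        id         INTEGER PRIMARY KEY,
        class_code TEXT NOT NULL            -- e.g. 'OBS'
    );
    CREATE TABLE participation (
        id        INTEGER PRIMARY KEY,
        act_id    INTEGER NOT NULL REFERENCES act(id),
        type_code TEXT NOT NULL,            -- e.g. 'AUT' for author
        role_id   INTEGER NOT NULL
    );
    -- Partial unique index: at most one author row per act.
    CREATE UNIQUE INDEX one_author_per_act
        ON participation(act_id) WHERE type_code = 'AUT';
    """
)

conn.execute("INSERT INTO act (id, class_code) VALUES (1, 'OBS')")
conn.execute(
    "INSERT INTO participation (act_id, type_code, role_id) VALUES (1, 'AUT', 100)"
)


def add_second_author() -> bool:
    """Try to attach a second author; return True if the store accepted it."""
    try:
        conn.execute(
            "INSERT INTO participation (act_id, type_code, role_id) "
            "VALUES (1, 'AUT', 101)"
        )
    except sqlite3.IntegrityError:
        return False
    return True


second_author_accepted = add_second_author()
print(second_author_accepted)  # False
```

Note that the index only enforces "at most one" author and applies to every act in the table; requiring exactly one author, or limiting the rule to certain observations, would need a trigger or application-level check.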

Observations on Entities

Capturing "approximate age of a house" or "tattoos of a person" at the persistence level violates the RIM because it requires a Role to be present. Especially the 'Identified' role class (which one could add to satisfy the RIM) doesn't add anything in terms of functionality.

- This could be an example where one would collapse an 'interoperability model' into a non-RIM-conformant persistence model.
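One way to picture such a collapse is a persistence record that points straight at the Entity, with the Role re-inserted only when an interoperability instance is produced. The sketch below is a guess at what that transform could look like; all class names, identifiers and the observation code are hypothetical, and the use of the 'IDENT' role class and 'SBJ' participation type is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntityObservation:
    """Collapsed persistence record: the observation refers directly to the entity."""
    entity_id: str
    code: str
    value: str


@dataclass(frozen=True)
class Role:
    class_code: str
    player_entity_id: str


@dataclass(frozen=True)
class Participation:
    type_code: str
    role: Role


@dataclass(frozen=True)
class Observation:
    class_code: str
    code: str
    value: str
    subject: Participation


def to_interoperability_model(row: EntityObservation) -> Observation:
    """Re-insert the Role that the RIM requires between the Act and the Entity."""
    role = Role(class_code="IDENT", player_entity_id=row.entity_id)
    return Observation("OBS", row.code, row.value, Participation("SBJ", role))


row = EntityObservation("house-42", "approximate-age", "80 years")
obs = to_interoperability_model(row)
print(obs.subject.role.class_code)        # IDENT
print(obs.subject.role.player_entity_id)  # house-42
```

The Role carries no information that is not already in the persistence record, which is the sense in which it adds nothing functionally.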

HXIT

The HXIT data type allows one to send a history of things as part of the interoperability model. Persisting an instance of HXIT would probably never be done as-is; one would have to transform it to a persistence model. Note that HXIT effectively dictates a special role of ControlActs in the persistence model.
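A minimal sketch of such a transform is shown below, assuming that each history item on the wire carries a value, a validity period, and optionally a reference to the ControlAct that caused the change. The class names, field names and identifiers are hypothetical; this is one possible shape for a versioned persistence model, not a description of how any implementation stores HXIT.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class HistoryItem:
    """One item of a history as received over the wire (simplified)."""
    value: str
    valid_from: str                       # ISO 8601 date
    valid_to: Optional[str] = None        # None means still valid
    control_act_id: Optional[str] = None  # ControlAct that made the change


@dataclass(frozen=True)
class VersionRow:
    """One row of a hypothetical version table in the persistence model."""
    object_id: str
    attribute: str
    version: int
    value: str
    valid_from: str
    valid_to: Optional[str]
    control_act_id: Optional[str]


def to_version_rows(object_id: str, attribute: str,
                    history: List[HistoryItem]) -> List[VersionRow]:
    """Flatten a history into numbered version rows, oldest first."""
    ordered = sorted(history, key=lambda item: item.valid_from)
    return [
        VersionRow(object_id, attribute, version, item.value,
                   item.valid_from, item.valid_to, item.control_act_id)
        for version, item in enumerate(ordered, start=1)
    ]


history = [
    HistoryItem("12 New Street", "2010-06-01", None, "ca-2"),
    HistoryItem("3 Old Road", "2005-01-15", "2010-06-01", "ca-1"),
]
rows = to_version_rows("patient-1", "addr", history)
print([(r.version, r.value) for r in rows])
# [(1, '3 Old Road'), (2, '12 New Street')]
```

Keeping the ControlAct reference on every version row is what gives ControlActs their special role in the persistence model: they become the audit trail that links each version to the event that produced it.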

Datatypes

One could persist the HL7 v3 datatypes as-is, or one could denormalize the content and persist the denormalized content. This is the subject of an upcoming whitepaper by Grahame.

- Example: consider the CD datatype

- CD is a flat model, where each CD has an OID for a code system and/or an OID for a value set

- very few users would implement a persistence model where the code system is simply referenced as an OID, especially given OID management issues

- most architects would normalise code systems in order to get functional and structural control over code system use within the system

- we ourselves would thoroughly normalise the codes as well as the code systems, and we'd probably have 20-30 tables to deal with CDs

- but it would be wrong to change the CD design for interoperability

- note that one could come up with other models for CD that are consistent with the abstract models, but include normalisation - but the more one does, the harder it gets to be truly consistent, and I'm not confident that there is any standardisation appropriate in this space

- this is a general issue, not only applicable to CD

- Example: the CS datatype

- CS relies on context to know what the code system is. The database would have to store the code system and value set OID explicitly, especially if one supports multiple versions of the RIM.
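The two datatype examples above can be sketched with a small normalised schema, again using Python's built-in sqlite3 module. This is a deliberately tiny illustration (three tables rather than 20-30); the table names, the helper functions and the attribute-to-code-system lookup are all assumptions made for the sketch, and value sets and RIM versions are left out for brevity.

```python
import sqlite3
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(
    """
    CREATE TABLE code_system (
        id   INTEGER PRIMARY KEY,
        oid  TEXT NOT NULL UNIQUE,
        name TEXT NOT NULL
    );
    CREATE TABLE concept (
        id             INTEGER PRIMARY KEY,
        code_system_id INTEGER NOT NULL REFERENCES code_system(id),
        code           TEXT NOT NULL,
        display_name   TEXT,
        UNIQUE (code_system_id, code)
    );
    """
)


def concept_id(oid: str, system_name: str, code: str,
               display: Optional[str] = None) -> int:
    """Store a CD as a reference to a normalised concept row and return its id."""
    conn.execute(
        "INSERT OR IGNORE INTO code_system (oid, name) VALUES (?, ?)",
        (oid, system_name),
    )
    (system_id,) = conn.execute(
        "SELECT id FROM code_system WHERE oid = ?", (oid,)
    ).fetchone()
    conn.execute(
        "INSERT OR IGNORE INTO concept (code_system_id, code, display_name) "
        "VALUES (?, ?, ?)",
        (system_id, code, display),
    )
    (cid,) = conn.execute(
        "SELECT id FROM concept WHERE code_system_id = ? AND code = ?",
        (system_id, code),
    ).fetchone()
    return cid


# A CS carries no code system on the wire, so the persistence layer supplies it
# from the attribute it was found in. This lookup is illustrative only.
CS_CONTEXT = {"Act.statusCode": ("2.16.840.1.113883.5.14", "ActStatus")}


def concept_id_for_cs(attribute: str, code: str) -> int:
    """Resolve the implied code system of a CS value, then store it like a CD."""
    oid, name = CS_CONTEXT[attribute]
    return concept_id(oid, name, code)


heart_rate = concept_id("2.16.840.1.113883.6.1", "LOINC", "8867-4", "Heart rate")
same_again = concept_id("2.16.840.1.113883.6.1", "LOINC", "8867-4", "Heart rate")
completed = concept_id_for_cs("Act.statusCode", "completed")
print(heart_rate == same_again)  # True
```

Storing the same CD twice yields the same concept row, which is the kind of structural control over code system use that the flat wire format does not provide. Supporting several RIM versions would presumably mean keying the CS lookup on the version as well as the attribute.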

Discussion

- Grahame http://www.marcdegraauw.com/2009/04/20/is-rimbaa-a-mistake/: The real problem with RIMBAA to me is that the RIM+DT+ontology is a model of interchange, not a model of management. It has a very observational view of the world (which does actually manifest in “everything is an observation” arguments, but this is the least important part of my intent here). It appropriately sweeps under the carpet all sorts of deep issues about how information should be stored to foster longitudinal management.

- Two instances off the top of my head:

- developers of real clinical applications are accustomed to the fun and games around patient identification, merging, and error management that occur in a large distributed environment. The RIM has very little to say about tracking the details of this. The RIM itself is probably adaptable to this purpose (it’s just a grammar, after all), but there is no support in the structural vocab - you’d have to invent appropriate terms. So simple EHRs may prosper, where these issues can be swept under the carpet, but as you scale, this would be very difficult. Also, the RIM stores are very denormalised with regard to storing identifiers - they are distributed across the entities rather than gathered in specific domain structures. For instance, there’s not even a single table of identifier types.

- the same applies to CD. CD is a very flat structure which is appropriate for interoperability. But for an actual working system, there’s no way I could imagine not normalising CD, and adding lots of extra information in order to make data entry and content management workable

- So I think that RIMBAA is very risky, both in terms of the design of what is already covered, and in terms of missing scope of the RIM. But with regard to the former, you could heavily normalise the persistence store while still following the semantics of the RIM.