MIF based code generation

Edited by Rene spronk


Category: Closed AID Issue


Latest revision as of 19:57, 7 October 2015


Summary

Code generation is a process in which source code (in a particular programming language) is generated automatically. It is an example of Model Driven Software Development. When it comes to code generation, the best (most complete) code should be generated from the MIF. Code generation is typically the mechanism used to create most RIMBAA applications.

- An alternative code generation method is Schema based code generation.

Analysis

The primary source for the code generation is one of the options shown below:

- MIF - the code generator has to be aware of the structure of the MIF. MIF is the primary metamodel format used for HL7 version 3.

  - See MIF for a more detailed description, as well as some notes related to MIF versioning and the current lack of a formal constraint language in HL7 v3.

- alternate model representation format - the code generator has to be aware of the alternate model representation format (e.g. UML including a few v3-specific extensions, EMF in Eclipse, OWL, or XML Schema). The alternate model representation format is a transformation of the MIF.
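To make MIF based code generation more concrete, here is a minimal Python sketch of a generator. It reads a model description and emits one class per model class, carrying the model documentation over as a docstring. The XML fragment is a hypothetical, heavily simplified stand-in for a MIF file. The element and attribute names (`class`, `attribute`, `conformance`, `min`, `max`) are assumptions for illustration and do not follow the actual MIF schema. `generate_class` and `generate_module` are hypothetical names.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified MIF-like fragment (NOT the real MIF schema).
MIF_FRAGMENT = """
<model>
  <class name="Patient">
    <documentation>A person in the role of a patient.</documentation>
    <attribute name="id" type="II" conformance="M" min="1" max="*"/>
    <attribute name="statusCode" type="CS" conformance="R" min="1" max="1"/>
    <attribute name="effectiveTime" type="IVL_TS" conformance="O" min="0" max="1"/>
  </class>
</model>
"""


def generate_class(cls: ET.Element) -> str:
    """Emit Python source for one model class, keeping its documentation."""
    lines = [f"class {cls.get('name')}:"]
    doc = cls.findtext("documentation")
    if doc:
        # A string literal as the first statement becomes the class docstring.
        lines.append(f"    {doc.strip()!r}")
    attrs = cls.findall("attribute")
    lines.append("    def __init__(self):")
    if not attrs:
        lines.append("        pass")
    for attr in attrs:
        # Repeating attributes (max != "1") start as an empty list.
        default = "None" if attr.get("max") == "1" else "[]"
        lines.append(
            f"        self.{attr.get('name')} = {default}"
            f"  # {attr.get('type')}, conformance={attr.get('conformance')}"
        )
    return "\n".join(lines) + "\n"


def generate_module(mif_text: str) -> str:
    """Generate source code for every class in the model fragment."""
    root = ET.fromstring(mif_text.strip())
    return "\n\n".join(generate_class(c) for c in root.findall("class"))


if __name__ == "__main__":
    print(generate_module(MIF_FRAGMENT))
```

Keeping the documentation and conformance information in the generated code follows the recommendation, made further on, to preserve all MIF information in the code generation framework.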

Overview

MIF based code generation has the benefit that it is based on the normative and complete specification of an HL7 v3 model, including its documentation. It has the disadvantage that cross-industry tools can't be used.

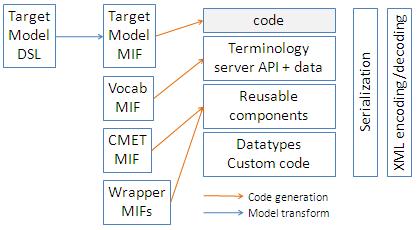

The figure below shows some of the functional programming units contained in RIMBAA applications.

The programming units include:

- Target Model: the model one needs to support. For HL7 version 3 interactions this is the payload message type.

  - This could be either MIF or a DSL. Mohawk and the NHS have tried to use a mixture of both (with the DSL based on business names of the classes), but users found the mixture too confusing. One should use either the HL7 version 3 models or a DSL (to hide the complexity of HL7 v3 models), not both.

  - It is recommended to carry all information in the MIF, including documentation, over into the code generation framework. The documentation in the MIF helps when using the generated code, and it can also be used to create documentation (e.g. of the API). Mohawk and Philips Research have both done this.

- Vocabulary: the management of vocabulary definitions and terminology services. This could be a third-party terminology server, or a terminology manager with an HL7 CTS API.

- Data types: a custom library with support for HL7 v3 data types R1 and/or R2. This module is interrelated with the Vocabulary module when it comes to the C* data types.

  - Currently there is no usable data types MIF file, so a library to support the v3 data types has to be developed as custom code.

    - A starting point could be the code generated from the datatypes.xsd XML schema file, the data types library contained in the JavaSIG reference implementation (Java), or the data types library defined by Mohawk (.NET).

    - Mohawk (Justin Fyfe, March 2010): "You can strip the data-types out of the Everest library, however you'll still need a formatter. At minimum, implementers will need MARC.Everest and MARC.Everest.Formatters.Datatypes.R1. The reason being: the data-types aren't based on any one revision of the data-types, they are mixed between more than one version. So if you try to serialize them directly using XML serialization, you'll end up with something that isn't conformant. The effort to 'extract' the data-types out of Everest completely would be very minimal (adding XML Serialization Attributes for R1 or R2 attributes and getting rid of the supporting Everest interfaces, etc.)." (Duane Bender): "By all means, use our library. It has been tested rather thoroughly internally and with a small number of outside partners, and we support it aggressively when issues arise."

    - (Grahame, March 2010) The data types in Eclipse OHF are an option. They are based on ISO 21090 but can read and write both R1 and R2 data types. They were written from the start without any dependencies except basic XML libraries; there are no Eclipse dependencies, so one should be able to extract the code. The library is not very actively supported at this time.


- Reusable model components: common class models (e.g. CMETs, wrappers)

- Serialization component: serializes RIM-based object structures according to the HL7 serialization specifications

- XML encoding/decoding (assuming an XML-based ITS is used): the encoding of a serialized RIM-based object graph in XML, or its decoding into an object structure. The encoding rules are specified by the ITS (e.g. XML ITS or RIM ITS).
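The interrelationship between the Data types and Vocabulary units for the C* data types can be sketched as follows. The sketch assumes a minimal coded data type and an in-memory stand-in for a terminology service. `CD`, `InMemoryTerminology` and `validate` are hypothetical names and are not part of any HL7 library or of the CTS API. The AdministrativeGender code system OID and its codes serve only as sample data.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set


@dataclass(frozen=True)
class CD:
    """Minimal stand-in for the HL7 v3 coded data type (hypothetical)."""
    code: Optional[str] = None
    code_system: Optional[str] = None  # OID of the code system
    display_name: Optional[str] = None
    null_flavor: Optional[str] = None


class InMemoryTerminology:
    """Hypothetical stand-in for a terminology service (e.g. behind a CTS API)."""

    def __init__(self, value_sets: Dict[str, Dict[str, Set[str]]]):
        # value set name -> {code system OID -> allowed codes}
        self._value_sets = value_sets

    def validate(self, value: CD, value_set: str) -> bool:
        """Check that a coded value is allowed by the named value set."""
        if value.null_flavor is not None:
            # A null value must not carry a code.
            return value.code is None
        allowed = self._value_sets.get(value_set, {})
        return value.code in allowed.get(value.code_system, set())


terminology = InMemoryTerminology(
    {"AdministrativeGender": {"2.16.840.1.113883.5.1": {"F", "M", "UN"}}}
)
```

With this design the data types library holds no vocabulary content itself. It only asks the Vocabulary unit whether a coded value is valid, so the terminology source can later be swapped for a real terminology server.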

Code Re-use

Suppose one has to generate code for 10 different (but related) HL7 v3 interactions. Each of those interactions consists of two wrappers (Transmission Wrapper and ControlAct Wrapper) and may reference a number of CMETs. Without optimizing for code re-use, each and every interaction MIF will produce its own copy of the code for the wrappers.
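One way to avoid duplicating the wrapper code is to write (or generate) the wrappers once and parameterize them by payload type. This follows the Mohawk experience with generics reported below. The Python sketch uses `typing.Generic` in place of C# or Java generics, and dataclass field metadata in place of the attributes/annotations used as serializer hints. All class names, the `xml_element` metadata key, the `xml_name` helper and the placeholder interaction ID are hypothetical.

```python
from dataclasses import dataclass, field, fields
from typing import Generic, Optional, TypeVar

P = TypeVar("P")  # payload type
Q = TypeVar("Q")  # query parameter list type
C = TypeVar("C")  # control act type


@dataclass
class ControlAct(Generic[P, Q]):
    """ControlAct wrapper, written (or generated) once for all interactions."""
    code: str  # trigger event code
    subject: Optional[P] = None
    query_by_parameter: Optional[Q] = None


@dataclass
class Transport(Generic[C]):
    """Transmission wrapper, written (or generated) once for all interactions."""
    interaction_id: str
    message_id: str
    # Field metadata acts as a hint the serializer can read (the XML element name).
    control_act: C = field(metadata={"xml_element": "controlActProcess"})


def xml_name(cls: type, field_name: str) -> str:
    """Return the serializer hint for a field, or its Python name if none."""
    for f in fields(cls):
        if f.name == field_name:
            return f.metadata.get("xml_element", f.name)
    raise KeyError(field_name)


# Only the payload (and parameter list) classes differ per interaction.
@dataclass
class PatientPayload:  # hypothetical payload class
    family_name: str


@dataclass
class NoParameters:  # hypothetical empty parameter list
    pass


PatientMessage = Transport[ControlAct[PatientPayload, NoParameters]]

msg = PatientMessage(
    interaction_id="EXAMPLE_IN000001",  # placeholder, not a real interaction ID
    message_id="1",
    control_act=ControlAct(code="EXAMPLE_TE000001", subject=PatientPayload("Jansen")),
)
```

Adding an eleventh interaction then only requires a new payload class and a new type alias. The wrapper code stays untouched.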

Code re-use can be based on:

- Separating the code for the wrappers from the code for the payload model. Wrapper models are shared by many interactions.

  - Mohawk: templates (generics) worked very well for reusing transmission and control act wrappers. Annotating the generalized class with attributes (or annotations) was a great way to pass hints to the serializer. For example, Transport<ControlAct<Payload, ParameterList>> was a good way to reuse the transport and control act wrappers while specializing the Payload and ParameterList type parameters.

- Creating a separate library with code to support CMETs. The library could make use of the fact that CMETs have a specialization hierarchy: CMETs with an identified flavor are a specialization of the identified-confirmable flavor, which in turn is a specialization of the universal flavor of the CMET.

  - One could opt to replace all CMET flavors by a generic CMET flavor. If one has to support multiple v3 interactions, it is likely that multiple flavors (variations with different levels of richness in terms of attributes and classes) of one and the same CMET are being used. Prior to code generation one could replace the various flavors with the most generic flavor (known as the universal flavor) of the CMET. This is possible because all CMET flavors are constrained versions of the universal flavor.

  - This approach has the advantage of increased code re-use: all CMET flavors can be processed by code generated from the universal flavor. A disadvantage is that when one uses the generated code to create/encode a v3 instance, one has to write additional code (potentially generated from the MIF of a more constrained flavor of the CMET) to ensure that the serialization complies with the constraints of the CMET flavor used in the original interaction.

  - Given that a typical application receives far more messages (different message types) than it sends (e.g. many systems receive ADT messages and send next to nothing), this may be a worthwhile strategy for an implementer to pursue. Additional code will be needed upon (or prior to) serialization to ensure that only those parts allowed by the original CMET flavor end up in the XML instance.

- Code re-use for payload message types. If a MIF describes a CIM that is a specialization/refinement of another CIM, the 'parent' CIM will be identified in the MIF of the refined model.

  - Is it practical/feasible to use this for the generation of re-usable code?
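The CMET flavor strategy above works in two steps. On receipt, every flavor is decoded with code generated from the universal flavor. Before serialization, the object is restricted to what the original flavor allows. The sketch below illustrates both steps. `AssignedPersonUniversal`, its attributes, `decode`, `restrict_to_flavor` and the contents of `FLAVORS` are all hypothetical. In practice the allowed content per flavor would be derived from the MIF of each flavor and would also cover nested classes, not just flat attributes.

```python
from dataclasses import asdict, dataclass
from typing import Dict, FrozenSet, Optional


@dataclass
class AssignedPersonUniversal:
    """Hypothetical class generated once from the universal flavor of a CMET."""
    id: Optional[str] = None
    code: Optional[str] = None
    name: Optional[str] = None
    telecom: Optional[str] = None
    addr: Optional[str] = None


# Hypothetical flavor definitions: the universal-flavor attributes each more
# constrained flavor allows. Each flavor is a subset of the one above it.
FLAVORS: Dict[str, FrozenSet[str]] = {
    "universal": frozenset({"id", "code", "name", "telecom", "addr"}),
    "identified-confirmable": frozenset({"id", "name"}),
    "identified": frozenset({"id"}),
}


def decode(values: Dict[str, str]) -> AssignedPersonUniversal:
    """Receiving side: any flavor fits into the universal-flavor class."""
    return AssignedPersonUniversal(**values)


def restrict_to_flavor(obj: AssignedPersonUniversal, flavor: str) -> Dict[str, str]:
    """Sending side: keep only non-empty attributes allowed by the flavor."""
    allowed = FLAVORS[flavor]
    return {k: v for k, v in asdict(obj).items() if k in allowed and v is not None}
```

The receiving path needs no flavor-specific code at all. The extra work is concentrated in the `restrict_to_flavor` step, matching the trade-off described above.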

Serialization

The generated code allows one to create object graphs that conform to one or more CIMs/LIMs. The main body of the software should work entirely with high-level, model-based, object-oriented constructs.

HL7 provides the serialization rules. These will need to be applied (like a transform) to the object graph in memory. All XML serialization/deserialization and validation can in principle be automatically generated from proper high-level representations.

The encoding is ITS specific. For example: the XML-encoding of HL7 v3 objects depends on the ITS used, either the XML ITS or the RIM ITS. The actual encoding and decoding in XML can be taken care of by the generated code. This has the advantage that as a programmer one doesn't have to worry about XML at all - one only has to deal with the object representation within an Object Oriented framework.
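Below is a minimal sketch of a generic serializer along the lines described above. It walks the in-memory object graph by reflection and encodes it as XML, so application code never touches XML directly. It assumes the generated classes are Python dataclasses. It also applies a deliberately simplified encoding rule: scalar fields become XML attributes, and nested objects (or lists of them) become child elements in the `urn:hl7-org:v3` namespace. The actual XML ITS and RIM ITS rules are considerably richer. `to_element`, `II` and `Patient` are hypothetical names, and the OID is a sample value.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field, fields, is_dataclass
from typing import Any, List, Optional

HL7_NS = "urn:hl7-org:v3"
ET.register_namespace("", HL7_NS)  # emit xmlns="urn:hl7-org:v3" instead of ns0:


def to_element(tag: str, obj: Any) -> ET.Element:
    """Encode a dataclass object graph as XML using simplified rules."""
    elem = ET.Element(f"{{{HL7_NS}}}{tag}")
    for f in fields(obj):
        value = getattr(obj, f.name)
        if value is None:
            continue  # absent values are simply omitted
        if isinstance(value, list):
            for item in value:  # lists are assumed to hold dataclass objects
                elem.append(to_element(f.name, item))
        elif is_dataclass(value):
            elem.append(to_element(f.name, value))
        else:
            elem.set(f.name, str(value))  # scalar -> XML attribute
    return elem


@dataclass
class II:  # simplified instance identifier data type
    root: str
    extension: Optional[str] = None


@dataclass
class Patient:  # hypothetical generated class
    classCode: str = "PAT"
    id: List[II] = field(default_factory=list)


patient = Patient(id=[II(root="2.16.840.1.113883.19.5", extension="12345")])
xml_text = ET.tostring(to_element("patient", patient), encoding="unicode")
```

Because `to_element` depends only on the structure of the classes, a second ITS could be supported by adding another encoder of the same shape, without changing the generated model classes.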

Alternate representation formats

Code generation based on an alternate model representation has the main advantage that one can use off-the-shelf cross-industry tools. One has to ensure, however, that all relevant information present in the MIF can be transformed into an expression in the alternate representation. It is not necessarily a requirement that the entire MIF contents be transformed into the alternate representation.

Taking UML as the prime example of an alternate format: all HL7 concepts can be expressed in UML, because UML can be extended using a so-called "UML profile". When RIM.coremif is imported, all the HL7-specific extensions are imported into stereotype properties. Two profiles are used: the HDF profile, and a RIM profile that allows the tracking of clones back to the RIM, as well as of the class/type and moodCode values of clone classes, which are redundant to an audience outside HL7.

The XML schema (notably the XML ITS schema as shipped with the publication) is effectively also an alternate representation format. The transformation from MIF to XML schema is lossy in nature. See schema based code generation for details.
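The lossy nature of the MIF to XML schema transformation can be illustrated with a small sketch. It maps a model attribute onto an `xs:element` declaration. `ModelAttribute` and `to_xsd_element` are hypothetical and simplified, and are not how the HL7 schema generator actually works. In this mapping, only name, type and multiplicity survive. Two attributes that differ in conformance (mandatory vs. required), documentation and vocabulary binding therefore end up as identical declarations. This is consistent with the later remark that conformance has to be added manually when reverse engineering MIFs from schemas.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional

XS_NS = "http://www.w3.org/2001/XMLSchema"


@dataclass
class ModelAttribute:
    """Hypothetical, simplified view of an attribute as described in a MIF."""
    name: str
    datatype: str
    min_occurs: int
    max_occurs: str  # "1" or "*"
    conformance: str  # e.g. "M" (mandatory) or "R" (required)
    documentation: Optional[str] = None
    vocabulary_binding: Optional[str] = None


def to_xsd_element(attr: ModelAttribute) -> ET.Element:
    """Map a model attribute onto an xs:element declaration.

    Only name, type and multiplicity are carried over. Conformance,
    documentation and vocabulary binding have no slot in this simplified
    mapping and are dropped, which makes the transformation lossy.
    """
    return ET.Element(f"{{{XS_NS}}}element", {
        "name": attr.name,
        "type": attr.datatype,
        "minOccurs": str(attr.min_occurs),
        "maxOccurs": "unbounded" if attr.max_occurs == "*" else attr.max_occurs,
    })


mandatory = ModelAttribute("statusCode", "CS", 1, "1", "M",
                           documentation="State of the act.",
                           vocabulary_binding="ActStatus")
required = ModelAttribute("statusCode", "CS", 1, "1", "R")
# Both yield identical declarations: the schema alone cannot tell them apart.
same = ET.tostring(to_xsd_element(mandatory)) == ET.tostring(to_xsd_element(required))
```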

HL7 v3 Projects without MIFs

There are some HL7 version 3 based projects that don't have a published set of MIFs - they only have normative XML schema. These projects mostly date from the early days of HL7 version 3, when MIF had not established itself as the metamodel expression used for HL7 version 3. The Dutch NHIN (AORTA) is one of the projects in this category.

Implementers have a couple of options:

- Create a new MIF based on the Visio file of the old artefact (if available). The serialized XML probably won't conform to the normative schema used by the project; this can be taken care of by XSLT.

  - Note (1): over the years the schema generator tool has been updated to fix some issues, and the automatic naming scheme for relationships was changed as well. These are the main causes of non-backwards-compatible changes.

- Use the MIF from a recent normative edition for an artefact that is similar in nature to the one used in the project. The serialized XML probably won't conform to the normative schema used by the project; this can be taken care of by XSLT. Also see note (1) above.

- Reverse engineer the MIFs from the XML schema. This is only an option for those with sufficient knowledge of MIF to build the transform from schema to MIF. A full population of the MIF won't be possible: at a minimum, details of the coding systems used and the conformance of attributes and relationships (mandatory/required) will have to be added manually. The XML schemas don't contain the necessary information; it would have to be derived from the (textual) documentation.
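The first two options rely on a post-processing step (XSLT in the text) that brings the serialized XML in line with the project's normative schema. A typical case is relationship names that changed along with the schema generator's naming scheme. The same rename idea is shown here as a short Python sketch using ElementTree. This is a swapped-in technique for illustration; XSLT remains the usual tool. The entries in `RENAMES` and the function name `rename_elements` are hypothetical. A real mapping would be derived by comparing the old and new schemas.

```python
import xml.etree.ElementTree as ET
from typing import Dict

HL7_NS = "urn:hl7-org:v3"

# Hypothetical mapping from element names produced by the newer model to the
# names expected by the project's older normative schema.
RENAMES: Dict[str, str] = {
    "subject1": "subject",
    "component2": "component",
}


def rename_elements(xml_text: str, renames: Dict[str, str]) -> str:
    """Rename HL7-namespaced elements (a Python stand-in for an XSLT)."""
    root = ET.fromstring(xml_text)
    prefix = f"{{{HL7_NS}}}"
    for elem in root.iter():  # includes the root element itself
        if isinstance(elem.tag, str) and elem.tag.startswith(prefix):
            local = elem.tag[len(prefix):]
            if local in renames:
                elem.tag = prefix + renames[local]
    return ET.tostring(root, encoding="unicode")
```

Renaming is only one kind of difference. Structural differences between the old and new artefacts would need more than a name map, which is where a full XSLT is a better fit.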